Coté

Eventually, to do a developer strategy your execs have to take a leap of faith

I’ve talked with an old colleague about pitching a developer-based strategy recently. They’re trying to convince their management chain to pay attention to developers to move their infrastructure sales. There’s a huge amount of “proof” and arguments you can make to do this, but my experience in these kinds of projects has taught me that, eventually, the executive in charge just has to take a leap of faith. There’s no perfect slide that proves developers matter. As with all great strategies, there’s a stack of work, but the final call has to be pure judgement, a leap of faith.

“Why are they using Amazon instead of our multi-billion dollar suite?”

You know the story. Many of the folks in the IT vendor world have had a great, multi-decade run in selling infrastructure (hardware and software). All of a sudden (well, starting about ten years ago), this cloud stuff comes along, and then things look weird. Why aren’t they just using our products? To cap it off, you have Apple in mobile just screwing the crap out of the analogous incumbents there.

But, in cloud, if you’re not the leaders, you’re obsessed with appealing to developers and operators. You know you can have a “go up the elevator” sale (sell to executives who mandate the use of technology), but you also see “down the elevator” people helping or hindering here. People complain about that SOAP interface, for some reason they like Docker before it’s even GA’ed, and they keep using these free tools instead of buying yours.

It’s not always the case that appealing to the “coal-facers” (developers and operators) is helpful, but chances are high that if you’re in the infrastructure part of the IT vendor world, you should think about it.

So, you have The Big Meeting. You lay out some charts, probably reference RedMonk here and there. And then the executive(s) still isn’t convinced. “Meh,” as one systems management vendor exec said to me most recently, “everyone knows developers don’t pay for anything.” And then, that’s the end.

There is no smoking gun

If you can’t use Microsoft, IBM, Apple, and open source itself (developers like it not just because it’s free, but because they actually like the tools!) as historic proof, you’re sort of lost. Perhaps someone has worked out a good, management consultant strategy-toned “lessons learned” from those companies, but I’ve never seen it. And believe me, I’ve spent months looking when I was at Dell working on strategy. Stephen O’Grady’s The New Kingmakers is great and has all the material, but it’s not in that much needed management consulting tone/style. (I’m ashamed to admit I haven’t read his most recent book yet, maybe there’s some in there.)

Of course, if Microsoft and Apple don’t work out as examples of “leaders,” don’t even think of deploying all the wacky consumer-space folks like Twitter and Facebook, or something as detailed as Hudson/Jenkins or Oracle DB/MySQL/MariaDB.

I think SolarWinds might be an interesting example, and if Dell can figure out applying that model to their Software Group, it’d make a good case study. Both of these are not “developer” stories, but “operator” ones; same structural strategy.

Eventually, they just have to “get it”

All of this has led me to believe that, eventually, the executives have to just take a leap of faith and “get it.” There’s only so much work you can do (slides and meetings) before you’re wasting your time if that epiphany doesn’t happen.

The transformation is complete.

If this is your bag, come check out a panel on developer relations at the OpenStack Summit on April 28th, in Austin. I’ll be moderating it!

So you want to become a software company? 7 tips to not screw it up.

Hey, I’ve not only seen this movie before, I did some script treatments:

Chief Executive Officer John Chambers is aggressively pursuing software takeovers as he seeks to turn a company once known for Internet plumbing products such as routers into the world’s No. 1 information-technology company. … Cisco is primarily targeting developers of security, data-analysis and collaboration tools, as well as cloud-related technology, Chambers said in an interview last month.

Good for them. Cisco has consistently done a good job to fill out its portfolio and is far from the one-trick pony people think it is (last I checked, they do well with converged infrastructure, or integrated systems, or whatever we’re supposed to call it now). They actually have a (clearly from lack of mention in this piece) little known-about software portfolio already.

In case anyone’s interested, here’s some tips:

1.) Don’t buy already successful companies, they’ll soon be old tired companies

Software follows a strange loop. Unlike hardware where (more or less) we keep making the same products better, in software we like to re-write the same old things every five years or so, throwing out any “winners” from the previous regime. Examples here are APM, middleware, analytics, CRM, web browsers…well…every category except maybe Microsoft Office (even that is going bonkers in the email and calendaring space, and you can see Microsoft “re-writing” there as well [at last, thankfully]). You want to buy, likely, mid-stage startups that have proven that their product works and is needed in the market. They’ve found the new job to be done (or the old one and are re-writing the code for it!) and have a solid code-base, go-to-market, and essentially just need access to your massive resources (money, people, access to customers, and time) to grow revenue. Buy new things (which implies you can spot old vs. new things).

2.) Get ready to pay a huge multiple

When you identify a “new thing” you’re going to pay a huge multiple of 5x, 10x, 20x, even more. You’re going to think that’s absurd and that you can find a better deal (TIBCO, Magic, Actuate, etc.). Trust me, in software there are no “good deals” (except once in a lifetime buys like the firesale for Remedy). You don’t walk into Tiffany’s and think you’re going to get a good deal, you think you’re going to make your spouse happy.

3.) “Drag” and “Synergies” are Christmas ponies

That is, they’re not gonna happen on any scale that helps make the business case, move on. The effort it takes to “integrate” products and, more importantly, strategy and go-to-market, together to enable these dreams of a “portfolio” is massive and often doesn’t pan out. Are the products written in exactly the same programming language, using exactly the same frameworks and runtimes? Unless you’re Microsoft buying a .Net-based company, the answer is usually “hell no!” Any business “synergies” are equally troublesome: unless they already exist (IBM is good at buying small and mid-companies who have proven out synergies by being long-time partners), it’s a long-shot that you’re going to create any synergies. Evaluate software assets on their own, stand-alone, not as fitting into a portfolio. You’ve been warned.

4.) Educate your sales force. No, really. REALLY!

You’re thinking your sales force is going to help you sell these new products. They “go up the elevator” instead of down so will easily move these new SKUs. Yeah, good luck, buddy. Sales people aren’t that quick to learn (not because they’re dumb, at all, but because that’s not what you pay and train them for). You’ll need to spend a lot of time educating them and also your field engineers. Your sales force will be one of your biggest assets (something the acquired company didn’t have) so baby them and treat them well. Train them.

5.) Start working, now, on creating a software culture, not acquiring one

The business and processes (“culture”) of software is very different and particular. Do you have free coffee? Better get it. (And if that seems absurd to you, my point is proven.) Do you get excited about ideas like “fail fast”? Study and understand how software businesses run and what they do to attract and retain talent. We still don’t really understand how it all works after all these years and that’s the point: it’s weird. There are great people (like my friend Israel Gat) who can help you, there’s good philosophy too: go read all of Joel’s early writing as a start, don’t let yourself get too distracted by Paul Graham (his is more about software culture for startups, who you are not; Graham-think is about creating large valuations, _not_ extracting large profits), and just keep learning. I still don’t know how it works or I’d be pointing you to the right URL. Just like with the software itself, we completely forget and re-write the culture of software canon about every five years. Good on us. Andrew has a good check-point from a few years ago that’s worth watching a few times.

6.) Read and understand Escape Velocity

This is the only book I’ve ever read that describes what it’s like to be an “old” technology company and actually has practical advice on how to survive. Understand how the cash-cow cycle works and, more importantly for software, how to get senior leadership to support a cycle/culture of business renewal, not just customer renewal.

7.) There’s more, of course, but that’s a good start

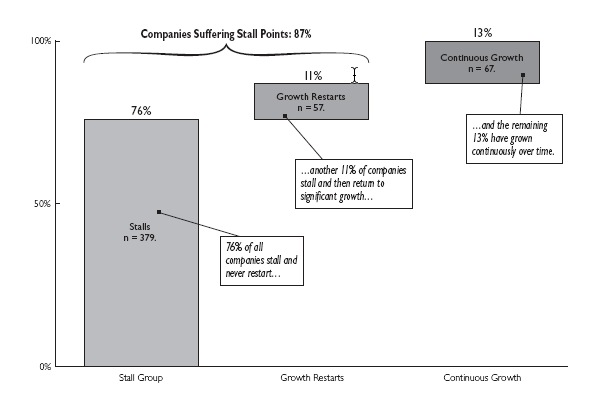

Finally, I spotted a reference to Stall Points in one of Chambers’ talks the other day which is encouraging. Here’s one of the better charts you can print out and put on your wall to look at while you’re taking a pee-break between meetings:

That charts all types of companies. It’s hard to renew yourself, it’s not going to be easy. Good luck!

The Problem with PaaS Market-sizing

Figuring out the market for PaaS has always been difficult. At the moment, I tend to estimate it at $20–25bn sometime in the future (5–10 years from now?) based on the model of converting the existing middleware and application development market. Sizing this market has been something of an annual bug-bear for me across my time at Dell doing cloud strategy, at 451 Research covering cloud, and now at Pivotal.

A bias against private PaaS

This number is in contrast to numbers you usually see in the single digit billions from analysts. Most analysts think of PaaS only as public PaaS, tracking just Force.com, Heroku, parts of AWS, Azure, and Google, and a bunch of “Other.” This is mostly due, I think, to historical reasons: several years ago “private cloud” was seen as goofy and made-up, and I’ve found that many analysts still view it as such. Thus, their models started off being just public PaaS and have largely remained so.

I was once a “public cloud bigot” myself, but having worked more closely with large organizations over the past five years, I now see that much of the spending on PaaS is on private PaaS. Indeed, if you look at the history of Pivotal Cloud Foundry, we didn’t start making major money until we gave customers what they wanted to buy: a private PaaS platform. The current product/market fit, then, for PaaS for large organizations seems to be private PaaS.

(Of course, I’d suggest a wording change: when you end-up running your own PaaS you actually end-up running your own cloud and, thus, end up with a cloud platform. Also, things are getting even more ambiguous at the infrastructure layer all the time — perhaps “private PaaS” means more “owning” the PaaS layer, regardless of who “owns” the IaaS layer.)

How much do you have budgeted?

With this premise — that people want private PaaS — I then look at existing middleware and application development market-sizes. Recently, I’ve collected some figures for that:

- IDC’s Application Development forecast puts the application development market (which includes ALM tools and platforms) at $24bn in 2015, growing to $30bn in 2019. The commentary notes that the influence of PaaS will drive much growth here.

- Recently from Ovum: “Ovum forecasts the global spend on middleware software is expected to grow at a compound annual growth rate (CAGR) of 8.8 percent between 2014 and 2019, amounting to $US22.8 billion by end of 2019.”

- And there’s my old pull from a Goldman Sachs report that pulled from Gartner, where middleware is $24bn in 2015 (that’s from a Dec 2014 forecast).

When dealing with large numbers like this and so much speculation, I prefer ranges. Thus, the PaaS TAM I tend to use now-a-days is something like “it’s going after a $20–25bn market, you know, over the next 5 to 10 years.” That is, the pot of current money PaaS is looking to convert is somewhere in that range. That’s the amount of money organizations are currently willing to spend on this type of thing (middleware and application development) so it’s a good estimate of how much they’ll spend on a new type of this thing (PaaS) to help solve the same problems.
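To make the habit of using a range instead of a point estimate concrete, here is a rough Python sketch over the figures quoted above. The dictionary labels and the helper names (`spread`, `base_from_cagr`) are made up for illustration and do not come from the analyst reports. The figures also cover different market definitions and different years, so the spread is only a sanity check, not a model.

```python
# Figures quoted above, in billions of US dollars. Labels are illustrative.
ESTIMATES = {
    "IDC appdev 2015": 24.0,
    "IDC appdev 2019": 30.0,
    "Ovum middleware 2019": 22.8,
    "Gartner middleware 2015": 24.0,
}


def spread(values):
    """Return the (low, high) spread of a collection of estimates."""
    values = list(values)
    return min(values), max(values)


def base_from_cagr(end_value, rate, years):
    """Back out the starting value implied by an end value and a CAGR."""
    return end_value / (1 + rate) ** years


low, high = spread(ESTIMATES.values())

# Ovum's 8.8% CAGR between 2014 and 2019 implies a 2014 starting point.
ovum_2014 = base_from_cagr(22.8, 0.088, 5)

print(f"cited estimates span ${low}bn to ${high}bn")
print(f"Ovum's implied 2014 middleware base: ${ovum_2014:.1f}bn")
```

The sources disagree by several billion dollars depending on definition and year, which is a good argument for quoting a loose range.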

Things get slightly dicey depending on including databases, ALM tools, and the underlying virtualization and infrastructure software: some PaaSes include some, none, or all of these in their products. Databases are a huge market (~$40bn), as is virtualization (~$4.5bn). The other ancillary buckets are pretty small, relatively. I don’t think “PaaS” eats too much database, but probably some “virtualization.”

So, if you accept that PaaS is both public and private PaaS and that it’s going after the middleware and appdev market, it’s a lot more than a few billion dollars.

Addressing the DevOps compliance problem

Satisfying the mythical auditors is often one of the first barriers to spreading DevOps initiatives more widely inside an organization. While these process-driven barriers can be annoying and onerous, once you follow the DevOps tradition of empathetic inclusion — being all “one team” — they can not only stop slowing you down but actually help the overall quality of the product. Indeed, the very reason these audit checks were introduced in the first place was to ensure overall quality of the software and business. There’s some excellent, exhaustive overviews out there of dealing with audits and the like in DevOps. In this column, I wanted to go through a little mental re-orientation for how to start thinking about and approaching the “compliance problem.”

Three-Ring Binder Ninjas

In this context, I think of “auditors” as falling into the category of governance, risk and compliance (GRC): any function that acts as a check on code and on how that code is produced and run as it goes through its lifecycle. I would put security in here as well, though that tends to be such a broad, important topic that it often warrants its own category (and the security people seem to like maintaining their occultic silo-tude, anyhow).

The GRC function(s) may impose self-created policies (like code and architectural review), third party and government imposed regulations (like industry standard compliance and laws such as HIPAA), and verification that risky behavior is being avoided (if you write the code, you can’t be the same person who then uses that code for cash payouts, perhaps, to yourself, for example). In all cases, “compliance” is there to ensure overall quality of the product and the process that created it. That “quality” may be the prevention of malicious and undesired behavior; that is, in a compliance-driven software development mindset, the ends rarely justify the means.

In many cases, the GRC function is more interested in proof that there is a process in place than actually auditing each execution of that process. This is a curious thing at first. Any developer knows that the proof is in the code, not the documentation. And, indeed, for some types of GRC the amount of automation that a DevOps mindset puts into place could likely improve the quality of GRC, ironically.

Establishing trust and automating compliance

Indeed, automation is one of the first areas to look at when reducing DevOps/GRC friction. First, treat complying with policies as you would any other feature. Describe it, prioritize it and track it. Once you have gotten your hands around it, you can start to figure out how to best implement that “feature.” Ideally, you can code and automate your way out of having to do too much manual work.

There’s work being done in the US Federal government along these lines that’s helpful because it’s visible and at scale. First, as covered in a recent talk by Diego Lapiduz, part of what auditors are looking for is to trust the software and infrastructure stack that apps are running on. This is especially true from a security standpoint. The current way that software is spec’d out and developed in most organizations follows a certain “do whatever,” or even YOLO principle. App teams are allowed to specify which operating systems, orchestration layers and middleware components they want. This may be within an approved list of options, but more often than not it results in unique software stacks per application.

As outlined by Diego, this variation in the stack meant that government auditors had to review just about everything, taking up to months to approve even the simplest line of code. To solve this problem, 18F standardized on one stack — Cloud Foundry — to run applications on, not allowing for variance at the infrastructure layer. They then worked with the auditors to build trust in the platform. Then, when there was just the metaphoric or literal “one line of code” to deploy, auditors could focus on much less, certainly not the entire stack. This brought approval time down to just days. A huge speed up.

When it comes to all the paperwork, also look to ways to automate the generation of the needed listings of certifications and compliance artifacts. This shouldn’t be a process that’s done in opaque documents, nor manually, if at all possible. Just as we’d now recoil in horror at manually deploying software into production, we should try to achieve “compliance as code” that’s as autogenerated (but accurate!) as possible. To that end, the work being done in the OpenControl project is showing an interesting and likely helpful approach.
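As a minimal sketch of what “compliance as code” could look like, the Python below keeps controls as structured data and generates the listing from them, so the document is rebuilt rather than hand-edited. It is not the OpenControl format. The `Control` fields, the `EX-` identifiers, and the report layout are all hypothetical, chosen only to show the idea.

```python
from dataclasses import dataclass


@dataclass
class Control:
    """One compliance requirement and how the platform satisfies it."""
    control_id: str      # hypothetical identifier, not from a real catalog
    requirement: str
    implementation: str
    evidence: str        # where an auditor can verify the claim


def render_report(system, controls):
    """Generate a plain-text compliance listing from structured data."""
    lines = [f"Compliance listing for {system}", ""]
    for c in sorted(controls, key=lambda c: c.control_id):
        lines.append(f"[{c.control_id}] {c.requirement}")
        lines.append(f"  Implemented by: {c.implementation}")
        lines.append(f"  Evidence: {c.evidence}")
    return "\n".join(lines)


controls = [
    Control("EX-2", "Deployments are logged",
            "CD pipeline writes an audit record per deploy",
            "pipeline audit log"),
    Control("EX-1", "Code is peer reviewed",
            "Merges require an approving review",
            "version control history"),
]

report = render_report("example-app", controls)
print(report)
```

Because the controls live next to the code, they can be versioned and reviewed like any other change, and the report can be regenerated on every build.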

The lesson for DevOps teams here is clear: Standardize your stack as much as possible and work with auditors to build their trust in that platform. Also, look into how you can automate the generation of compliance documents beyond the usual .docx and .pptx suspects. This will help your GRC process move at DevOps speed. And it will also allow your auditors to still act as a third party governing your code. They’ll probably even do a better job if they have these new, smaller batches of changes to review.

Refactoring the compliance process

To address the compliance issue fully, you’ll need to start working with the actual compliance stakeholders directly to change the process. There’s a subtle point right there: Work with the people responsible for setting compliance, not those responsible for enforcing it, like IT. All too often, people in IT will take the strictest view of compliance rules, which results in saying “no” to virtually anything new — coupled with Larman’s Law, you’ll soon find that, mysteriously, nothing new ever happens and you’re back to the pre-DevOps speed of deployment, software quality levels and timelines. You can’t blame IT staff for being unimaginative here — they’re not experts in compliance and it’d be risky for them to imagine “workarounds.” So, when you’re looking to change your compliance process, make sure you’re including the actual auditors and policy setters in your conversations. If they’re not “in the room,” you’re likely wasting your time.

As an example, one of the common compliance problems is around “developers deploying to production.” In many cases and industries, a separation of duties is required between coding and deploying. When deploying code to production was a more manual, complicated process, this could be extremely onerous. But once deployments are push-button automated with a good continuous delivery pipeline, you might consider having the product manager or someone who hasn’t written code be the deployer. This ensures that you can “deploy at will,” but keeps the actual coders’ fingers off the button.
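A pipeline could enforce that separation of duties with a check along these lines. This is only a sketch: the function name `can_deploy` and the people named are hypothetical, and a real pipeline would pull the commit authors from version control rather than a hard-coded list.

```python
def can_deploy(deployer, commit_authors):
    """Allow a deploy only if the deployer wrote none of the commits."""
    # Normalize names so "Alice" and "alice " count as the same person.
    authors = {a.strip().lower() for a in commit_authors}
    return deployer.strip().lower() not in authors


release_authors = ["alice", "bob"]  # hypothetical authors of this release

print(can_deploy("carol", release_authors))  # product manager, wrote no code
print(can_deploy("Alice", release_authors))  # a coder on this release
```

The rule is simple enough to show to an auditor directly, which is part of the appeal.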

Another intriguing compliance strategy, suggested by Home Depot’s Tony McCulley (who also suggested the above approach to the separation of duties), is to give GRC staff access to your continuous delivery process and deployment environment. This means instead of having to answer questions and check for controls for them, you can allow GRC staff to just do it on their own. Effectively, you’re letting GRC staff peer into and even help out with controls in your software. I’d argue that this only works if you have a well-structured platform supporting your CD pipeline with good UIs that non-technical staff can access.

It might be a bit of a stretch, but inviting your GRC people into your DevOps world, especially early on, may be your best bet at preventing compliance slowdowns. And, if there’s any core lesson of DevOps, it’s that the real problems are not in the software or hardware, but the meatware. Figuring out how to work better with the people involved will go a long way towards addressing the compliance problem.

(I originally wrote this December 2015 for FierceDevOps, a site which has made it either impossible or impossibly tedious to find these articles. Hence, it’s now here.)

All the taboos about working at home

Working at home, with a family, is a challenge, as this nice overview piece at The Register goes over. You think you’re trading all those interruptions from co-workers talking about the sportsball or just complaining about the daily grind, but you’re actually trading in for a different set of co-workers, your family. And their requests for your attention are harder to stonewall than chatty cube-mates.

And then there’s the whole “out of sight, out of mind” effect with management at work. I’ve worked at home on and off (mostly at home) over the past decade and it has its challenges. I lead a public enough work-life, along with remote working aware folks, that Management forgetting about me rarely comes up. However, as my kids have grown up and there’s, consequently, more going on at home, figuring out how to shut-out my family is a constant challenge. You see, that’s the taboo part! “Shut-out” - you could say “manage” or all sorts of things, but if you follow the maker/manager mentality that most individual contributor (non-managers) knowledge workers must, you have to shut people (“distractions”) out.

Achieving flow considered a luxury

On the other hand, this “flow” is a luxury us privileged folks have been experiencing for a long time:

What I didn’t know at the time was that this is what time is like for most women: fragmented, interrupted by child care and housework. Whatever leisure time they have is often devoted to what others want to do – particularly the kids – and making sure everyone else is happy doing it. Often women are so preoccupied by all the other stuff that needs doing – worrying about the carpool, whether there’s anything in the fridge to cook for dinner – that the time itself is what sociologists call “contaminated.”

I came to learn that women have never had a history or culture of leisure. (Unless you were a nun, one researcher later told me.) That from the dawn of humanity, high status men, removed from the drudge work of life, have enjoyed long, uninterrupted hours of leisure. And in that time, they created art, philosophy, literature, they made scientific discoveries and sank into what psychologists call the peak human experience of flow.

Women aren’t expected to flow.

It’s like there’s a maker/manager/_mother_ time management paradigm. (Speaking of that privilege: here I am, with time to type this very post.)

Context switch like Nietzsche

What I’ve been doing is trying to reprogram my mind to think in slices of time fragments and to gorge on 60 minute time spans when they come up. I recall learning that one of the reasons Nietzsche wrote so many aphorisms was because he didn’t have time to write longer pieces; his chronic sickness conditions (whatever they were) gave him little “flow” time.

When I shifted to work at Dell and was then on the road at 451 Research, I was similarly afflicted with fragmented time (at Dell, you’d be in meetings all day because that’s how things ran). I remember one time when I was at 451 Research I’d been trying to finish a piece on SUSE and was walking down a ponderously long casino hallway: I just stopped, pulled out my laptop, and started typing for about ten minutes. Finding those little slices that add up to a full 90 to 120 minutes is hard…but, at least with non-programming knowledge work, you can get over the tax of context switching enough to make it worth it.

However, this is all within a large context: the computer. All of that partial attention swapping on the Internet over these years has helped warp my brain to work in fragments, but now I need to train my mind to swap between computer and “real life.” So far, it’s slow going.

Resisting the shut-out

All of this said, I really value working from home. I enjoy seeing my kids and wife all day long (so much more so than all those random run-ins with people in the office). I like being in my own environment, being able to eat at home, and on those rare occasions when I’m in a boring, useless, but obligatory meeting, doing something more useful with my time as I listen in. I have one of the better situations I’ve ever had at work right now: everyone on my team, including my boss, is remote. This means we all know the drill, use the tools, and coordinate.

As my wife is fond of telling me, I should just lock my office door more, which is true. The other part that you, as a remote worker, have to program your brain for is: you’re going to be interrupted while you’re in “flow” a lot. Just accept it. In the office there’s plenty of fire-alarms, going to lunch, people stopping by your desk, and so on. We can’t all be on the flat food diet. My other bit of advice is to take advantage of being at home and a flexible work schedule to do more with your family. If you’re like me, you travel a fair amount as well. So just as I have to gobble up every long span of time greedily, when I’m home and have the chance to do things with family, I try to.

Barriers to DevOps in government

There’s just as much pull for DevOps in government as there is in the private sector. While most of our focus around adoption is on how businesses can and are using DevOps and continuous delivery, supported by cloud, to create better software, many government agencies are in the same position and would benefit greatly from figuring out how to apply DevOps in their organizations.

Just 13% of respondents in a recent MeriTalk/Accenture survey of 152 US Federal IT managers believed they could “develop and deploy new systems as fast as the mission requires.” The impact of improving on that could be huge. For example, the US Federal government, by conservative estimates, spends $84 billion a year on IT. And yet, the Standish Group believes that 94% of government IT projects fail. These are huge numbers that, with even small improvements, can have massive impact. And that’s before even considering the benefits of simply improving the quality of software used to provide government services.

As with any organization, the first filter for applicability is whether or not the government organization is using custom written software to accomplish its goals. If all the organization is doing is managing desktops, mobile, and packaged software, it’s likely that just SaaS and BYOD are the important areas to focus on. DevOps doesn’t really apply, unless there’s software being written and deployed in your organization or, as is more common in government agencies, for your organization as we’ll get to when we discuss “contractors.”

When it comes to adopting and being successful with DevOps, the game isn’t too different than in the business world: much of the change will have to do with changing your organization’s process and “culture,” as well as adopting new tools that automate much of what was previously manual. You’ll still need to actually take advantage of the feedback loop that helps you improve the quality of your software, with respect to defects, performance in production, and design quality. There are a few things that tend to be more common in government organizations that bear some discussion: having to cut through red-tape, dealing with contractors, and a focus on budget.

Living with red-tape

While “enterprise” IT management tasks can be onerous and full of change review boards and process, government organizations seem to have mastered the art of paperwork, three ring binders, and red tape in IT. As an example, in the US Federal government, any change needs to achieve “Authority To Operate” which includes updating the runbook covering numerous failure conditions, certifying security, and otherwise documenting every aspect of the change in, to the DevOps minded, infinitesimal detail. And why not? When was the last time your government “failed fast” and you said “gosh, I guess they’re learning and innovating! I hope they fail again!” No, indeed. Governments are given little leash for failure and when things go terribly wrong, you don’t just get a tongue lashing from your boss, but you might get to go talk to Congress and not in the fun, field-trip how a bill is made kind of way. Being less cynical, in the military, intelligence, and law enforcement parts of government, if things go wrong more terrible things than denying you the ability to upload a picture of your pot roast to Instagram can happen. It’s understandable — perhaps, “explainable” — that government IT would be wrapped up in red-tape.

However, when trying to get the benefits of continuous delivery, DevOps, and cloud (or “cloud native” as that triptych of buzzwords is coming to be known), government organizations have been demonstrating that the comforting mantle of red-tape can be stripped. For example, in the GSA, the 18F group has reduced the time it takes to get a change through from 9–14 months to just two to three days.

They achieved this because now when they deploy applications on their cloud native platform (a Cloud Foundry instance that they run on Amazon Web Services) they are only changing the application, not the whole stack of software and hardware below the application layer. This means they don’t need to re-certify the middleware, runtimes and development frameworks, let alone the entire cloud platform, operating systems used, networking, hardware, and security configurations. Of course, the new lines of application code need to be checked, but because they’re following the small batch principles of continuous delivery, those net-new lines are few.
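The reasoning here can be sketched as a small Python model: the review scope is whatever changed minus whatever the auditors already trust. The layer names and the function `layers_to_review` are illustrative assumptions, not a description of 18F’s actual process.

```python
# Layers of a deployment, bottom to top. The names are illustrative.
STACK_LAYERS = ["hardware", "networking", "operating system",
                "cloud platform", "middleware", "runtime", "application"]


def layers_to_review(changed, pre_approved):
    """Return the changed layers that auditors have not already approved."""
    return [layer for layer in STACK_LAYERS
            if layer in changed and layer not in pre_approved]


# Bespoke stack: each app brings its own layers, nothing is pre-approved.
bespoke = layers_to_review(changed=set(STACK_LAYERS), pre_approved=set())

# Standard platform: everything below the app is already trusted,
# and a deploy only changes the application layer.
platform = set(STACK_LAYERS) - {"application"}
standard = layers_to_review(changed={"application"}, pre_approved=platform)

print(len(bespoke), "layers to review:", bespoke)
print(len(standard), "layer to review:", standard)
```

In this toy model the review surface shrinks from the whole stack to the application layer alone, which is the mechanism behind the faster approvals described above.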

The lesson here is that you’ll need to get your change review process — the red-tape spinners — to trust the standard cloud platform you’re deploying your applications on. There could be numerous ways to do this from using a widely used cloud platform like Cloud Foundry, building up trusted automation build processes, or creating your own platform and software release pipelines that are trusted by your red-tape mavens.

Contractors & Lost Competency

If you want to get staff in a government IT department ranting at you all night long, ask them about contractors. They loathe them and despise them and will tell you that they’re “killing” government IT. Their complaint is that contractors cannot structurally deal with an Agile mentality that refuses to lock-down a full list of features that will be delivered on a specific date. As you shift to not even a “DevOps mindset,” but an Agile mindset where the product team is more discovering with each iteration what the product will be and how to best implement it, you need the ability to change scope throughout the project as you learn and adapt. There is no “fail fast” (read: learning) when the deliverables 12 months out are defined in a 300 page document that took 3–6 months to scope and define.

Once again, getting into this state is likely explainable: it’s not so much that any actor is responsible, it’s more that the management in government IT departments is now responsible to fix the problem. The problem is more than a square peg (waterfall mentalities from contractors) in a round-hole (government IT departments that want to be more Agile) issue. After several decades of outsourcing to contractors, there’s also a skills and cultural gap in the IT departments. Just as custom written software is becoming strategically important to more organizations, many large IT departments find themselves with little experience and even less skill when it comes to software and product development. I hear these same complaints frequently from the private sector who’ve outsourced IT for many years, if not decades.

The Agile community has long discussed this problem and there are always interesting, novel efforts to get back to insourcing. A huge part is simply getting the terms of outsourcing agreements to be more compatible. The flip-side of this is simplifying the process to become a government contractor: it’s sure not easy at the moment. Many of the newer, more Agile and DevOps minded contractors are smaller shops that will find the prospect of working with the government daunting and, well, less profitable than working with other organizations. Making it easier for more shops to sign up will introduce more competition, rather than the smaller, paperwork-strangled market that exists now. The current pool of government contractors seems mostly dominated by larger shops that can navigate the government procurement process and seem to, for whatever reason, be the ones who are the most inflexible and waterfall-y.

Another part is refusing to cede project management and scoping management to external parties, and making sure you have the appropriate skills in-house to do so. Finally, the management layers in both public and private sector need to recognize this as a gap that needs to be filled and start recruiting more in-house talent. Otherwise, the highly integrated state of DevOps (let alone a product focus vs. a project focus) will be very hard to achieve.

Addressing budgetary concerns with waste removal

Every organization faces budget problems. We call the exceptions “unicorns” because they have this mythical quality of seemingly unlimited budget. The spiral horn-festooned are the exception that proves the rule that all organizations are expected to spend money wisely. Government, however, seems to operate in a permanent state of shrinking IT budgets. And even when government organizations experience the rare influx of cash, there’s hyper-scrutiny on how it’s spent. To me, the difference is that private sector companies can justify spending “a lot” of money if “a lot” of profit results, whereas government organizations don’t find such calculations as easy. Effectively, government IT departments have to prove that they’re spending only as much money as necessary and strategically plan to have their budget stripped down in each budgetary cycle.

Here, the Lean-think part of DevOps can actually be very helpful and, indeed, may become a core motivation for government to look to DevOps. My simplification of the goals of DevOps is to:

- Ensure that the software has good availability (which it does by focusing on resilience vs. perfection, the ability to recover from failure quickly rather than avoiding all failure by rarely changing anything). This is something that recent failures in US Federal government IT can appreciate.

- Enable the weekly, if not daily, deployment of new code into production with continuous delivery. The goal here is to improve the quality of the software, both bugs and “design” quality, ensuring that the software is what users actually want by iterating over features frequently.

Those two goals end up working harmoniously together (with smaller batches of code deployed more frequently, you reduce the risk of each causing major downtime, for example). For government organizations focused on “budget,” the focus on removing as much “waste” from the system to speed up the delivery cycle starts to look very attractive for the cost-cutting minded. A well functioning DevOps shop will spend much time analyzing the entire, end-to-end cycle with value-stream mapping, stripping out all the “stupid” from the process. The intention of removing waste in DevOps think is more about speeding up the software release process and helping ensure better resilience in production, but a “side effect” can be removing costs from the system.

Often, in the private sector we say that resources (time, money, and organization attention) saved in this process can be reallocated to helping grow the business. This is certainly the case in government, where “the business” is, of course, understood not as seeking profits but delivering government services and fulfilling “mission” requirements. However, simply reducing costs by finding and removing unneeded “waste” may be a highly attractive outcome of adopting DevOps for governments.

“Bureaucracy” doesn’t have to be a bad word

As with any large organization, governments can be horrendous bureaucracies. Pulling out the DevOps empathy card, it’s easy to understand why people in such government bureaucracies can start to stagnate and calcify, themselves becoming grit in the gears of change if not outright monkey-wrenches.

In particular, there are two mind-sets that need to change as government staff adopt DevOps:

- Analysis paralysis — The almost default impulse to over analyze and specify with ponderous, multi-100 page documents the shifting to a more Agile and DevOps mindset. A large part of the magic of DevOps and Agile think is avoiding analysis paralysis and learning by doing rather than thinking in .docx. Government teams not familiar with smaller batch, experiment-based approaches to software development would do well to read up on Lean Startup think, perhaps checking out Lean Enterprise for a compendium of current best practices and, well, mindsets.

- Stagnant minds — large organizations, particularly government ones, can breed a certain learned helplessness and even laziness in individuals. If things are slow moving, impossible to change, and managed in a tall blade of grass gets cut style, individuals will tune out rapidly. If DevOps is understood as a practice to help jump-start all too slow IT organizations, it’ll often be the case that individuals in that organization are in this stagnated mindset. One of the key challenges becomes inspiring and then motivating staff to care enough to try something new and stick with it.

Again, these problems frequently happen in the private sector. But, they seem to be larger problems in government that bear closer attention. Thankfully, it seems like leaders in government know this: in a recent global Gartner survey, 40% of government CIOs said they needed to focus more on developing and communicating their vision and do more coaching. In contrast, 60% said they needed to reduce the time spent in command-and-control mode. Leading, rather than just managing, the IT department, as ever, is key to the transformative use of IT.

More than rats dragging pizza

At any given time, it’s easy to be dismissive of government as wasteful and even incompetent. That’s the case in the U.S. at least, if you can judge based on the many politicians who seem to center their political campaigns around the idea of government waste. In contrast, we praise the private sector for their ability to wield IT to…better target ads to get us to buy sugar coated corn flakes. Don’t get me wrong, I’m part of the private sector and I like my role chasing profit. But we in the “enterprise” who are busy roaming the halls of capitalism don’t often get the chance to positively affect, let alone simply help and improve the lives of, everyone on a daily basis. Government has that chance and when you speak with most people who are passionate about using IT better in government, they want to do it because they are morally motivated to help society.

The benefits of adopting DevOps have been clearly demonstrated in recent years, and for businesses we’re seeing truth in the statement that you’re either becoming a software organization or losing to someone who is. As government organizations start to think about improving how they do IT, they have the chance to help all of us: “winning” isn’t zero-sum like it can be in the business world. To that end, as we in the industry find new, better ways to create and deliver software, it behoves us to figure out how government can benefit as well. That’ll get us even closer to making software suck less, something we’ll all benefit from.

(I originally wrote this September 2015 for FierceDevOps, a site which has made it either impossible or impossibly tedious to find these articles. Hence, it’s now here.)

Management’s role in DevOps: orchestrating the why

What’s the point of it all? Why are we doing this? These questions pop up frequently in IT teams where the reason for doing your daily activities — like churning through tickets, whizzing up builds, or “doing the DevOps” — seems only that someone, somewhere told you to do it.

If you’re in this situation — you have no idea how your activities are helping your organization make money — you should stop and find out quickly what your company’s goals and strategies are to make sure you’re not wasting time. The good news is the confusion is probably not your fault; the bad news is that you’ll have to convince management that the fault is theirs.

Gratuitous optimization by technology

The adoption of things like DevOps or the cloud sometimes happens for wrong or unknown reasons — gratuitous plans without a tight connection to business goals. We used to call this “management by magazine,” and it happens now more than ever. A process — even “cultural” — change like DevOps is not like the easy improvement fodder of virtualization. But you can’t blame IT management for trying gratuitous optimization by technology. The magic of VMware was that you just installed it, and things got better because it improved resource utilization. You didn’t need to figure out why or match it to goals. If you inject DevOps into an organization expecting it to just improve things without tightly coupling to strategy, you’ll get weird results. You’ll probably just create more work!

If you don’t know where you are going, any road will get you there

Agile, DevOps, and now “cloud native” (I hope you’re updating your buzzword lexicons!) need strong connections to the business goals — some would say “strategy” — to be successful. In order to operate in a lean fashion, you want to only do things that are valuable and useful to the customer (or obligatory to stay in business, like compliance and auditability). Indeed, being able to sort out what’s valuable and useful to the business is a key tool for doing DevOps successfully. You want to cut out all the stuff that doesn’t matter, or at least minimize it. Otherwise, you just sort of do everything and anything because there’s no way to determine if any given activity is helpful.

So how do you align your work with the overall business strategy?

There are tried and true (though seemingly new to the IT department) techniques like value-stream mapping: take any given business process and map out all the activities that happen from end-to-end, questioning if each is needed. Most people are shocked at how much “stupid” is going on in such maps and it’s a great technique for finding and removing bottlenecks.
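To make the mapping exercise a little more concrete, here’s a minimal sketch of what tallying up a value-stream map might look like. Everything in it is made up for illustration: the step names, the hours, and the `Step` class are assumptions of mine, not data from any real organization. The idea is simply to compare time spent doing work against time spent waiting, and to see where the biggest wait sits.

```python
from dataclasses import dataclass


@dataclass
class Step:
    """One activity in a (hypothetical) value-stream map."""
    name: str
    work_hours: float  # time someone is actually working on the change
    wait_hours: float  # time the change sits in a queue before this step


# Illustrative numbers only; measure your own process to fill these in.
steps = [
    Step("write code", 16, 0),
    Step("change review board", 2, 80),
    Step("security audit", 4, 40),
    Step("deploy to production", 1, 24),
]

lead_time = sum(s.work_hours + s.wait_hours for s in steps)
work_time = sum(s.work_hours for s in steps)
flow_efficiency = work_time / lead_time  # share of lead time spent on real work
bottleneck = max(steps, key=lambda s: s.wait_hours)

print(f"Lead time: {lead_time} hours, of which {work_time} are actual work")
print(f"Flow efficiency: {flow_efficiency:.0%}")
print(f"Longest wait is before: {bottleneck.name}")
```

With numbers like these, most of the calendar time is waiting rather than working, which is usually where the “stupid” hides.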

If you’re in the consumer business, like so many “unicorns” are, it’s easy to understand the mission and the goals: get more people buying books, downloading your app, streaming more videos, and so forth. But in other, more traditional settings, it’s common to find a willful disentanglement between how IT is used and how it contributes to customer value. More often than not, the stasis-inducing ludlum of time and success just numbs people’s collective minds and sets them into auto-pilot here.

You see this happen most often around decision making processes in business: things that need approval, planning processes and market assessments. People in large companies love cogitating and wrapping process around activities that cause change in the company; it feels like they almost like to slow down change and activity. You might even codify it in a whole process with change review board meetings and careful inspection of all the changed components by a panel of architectural and security audit wizards.

Cultivating complainers

You can also identify where your processes aren’t matching with business goals and strategies by cultivating squeaky wheels.

When change happens, individuals often pipe up asking, “Why are we doing this? Why is this valuable to the customer?” More than likely, they’re seen as troublemakers or sand in the gears, and are shut down by the group, Five Monkeys style. At best, these individuals cope with learned helplessness; at worst, they leave, kicking off a sort of Idiocracy effect in the remaining organization.

These “complainers” are actually a valuable source of data for testing out how well understood a company’s goals and strategies are. You want to court these types of people to continually test out how effective the organization is at setting goals and strategy. One fun practice, as mentioned by Ticketmaster’s Jody Mulkey, is to interview new employees a month after starting to ask them what seems “screwy around here” before they get used to it.

Blame management

So what do you do when they or any other process you’ve tried identify real disconnects between what you’re doing and why? The fun begins, because it’s management’s job to fix this bug. The role of mid- and upper-level management in the cloud native era is poorly understood and documented (it’s always been so, of course, in creative-driven endeavors like software). To be successful at these types of initiatives, management has a lot of work to do and the managers who are overseeing DevOps teams can’t assume things will just proceed as normal. This is why, as with software, you need to continually test the assumption that people know the business goals and strategy.

This point has been stuck in my brain after reading Leading the Transformation (an excellent book for managers figuring out how to apply DevOps “in the large”), which states the point more plainly than I can:

Management needs to establish strategic objectives that make sense and that can be used to drive plans and track progress at the enterprise level. These should include key deliverables for the business and process changes for improving the effectiveness of the organization.

What I like about this advice (and the rest in the book) is that it’s geared to defining management’s job in all this DevOps hoopla. In said hoopla, we spend a lot of time on what the team does, but we don’t spend too much time on what management should do. It’s lovely thinking about flattening the organization and having everyone act as responsible peers, but in large organizations, this isn’t done easily or quickly. Just as with all those zippy containers, you need an orchestration layer to make sense of all the DevOps teams working together. That’s the job of management in the cloud native era: setting goals and orchestrating all the teams to make sure they’re all pulling in the right direction.

(I originally wrote this August 2015 for FierceDevOps, a site which has made it either impossible or impossibly tedious to find these articles. Hence, it’s now here.)

There’s no easy way to model DevOps ROI

Think you can show DevOps ROI? Think again

“What is the ROI for DevOps?” is a question that has been tossed my way frequently of late. There are numerous reasons why this is at the same time an absurd but also important question.

Modeling DevOps ROI is absurd because predicting the gains and costs of a process, let alone one as new as DevOps, is difficult and dependent on all sorts of unique variables per organization.

However, thinking through DevOps ROI is an important step for adoption because the promises of DevOps are so grandiose and the changes needed sound large and almost impossible to achieve for “normal” people.

That is, DevOps is an unmeasurable process with respect to ROI (it has value, to be sure, but is nearly impossible to measure independently and precisely) and, yet, because “doing DevOps” seems to be such a big change, organizations need assurances that transformation will be “worth it.”

So, if you’re asked to help show the ROI for DevOps, what can you do? Let’s cover three ways to approach the problem. I don’t think any of them are a real answer, but they get closer to satisfying some possible motivations for asking for ROI in the first place.

What is ROI?

First, what is ROI? I misuse economic and accounting terms all the time, but I think of “ROI” as showing the profit you achieve after a given period of time, for our purposes, by doing something new and different with IT: you might buy some new software (running on a cloud platform like Pivotal Cloud Foundry instead of just IaaS), do your software development and delivery differently (like, “doing DevOps”), and so forth.

With ROI, you’re not only interested in the question “does it work,” you’re interested in the question “did this make me money?” Oftentimes, you’re also interested in comparing the costs of competing approaches, or just inflicting vendors with the thrill of “bake offs” and ROI spreadsheet fights.

To figure that basic ROI, you use a brutally simple formula:

(Gain - Cost)/Cost = ROI

You can convert the end result to a percentage if you’re not into the whole decimal thing.

As a simple example, let’s say you sell an app that allows people to track how many apples they eat each day, so they can keep those ravenous doctors out of the way. After it’s shipped for a month, you’ve made $20,000 in sales for the app. To get to that point, it cost you $5,000 in paying for developer time and $5,000 in infrastructure charges (the back-end that analyzes the data, mashes it up with Facebook and Twitter profiles, and then sells that data to the Apple Sellers Association of Tomorrow takes some horse-power and storage!).

So, the ROI for the apple muncher app is:

($20,000 - $10,000)/$10,000 = 100%

A pretty good return on your investment! It’s certainly better than the rate I get on any of my personal investments.
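If you’d rather see that as code than as arithmetic, here’s a minimal sketch of the same calculation. The `roi` function name and the variable names are my own; the numbers are the apple muncher figures from above.

```python
def roi(gain: float, cost: float) -> float:
    """Return ROI as a fraction: (gain - cost) / cost."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (gain - cost) / cost


# Apple muncher numbers from the example above.
sales = 20_000
developer_time = 5_000
infrastructure = 5_000

apple_muncher_roi = roi(sales, developer_time + infrastructure)
print(f"{apple_muncher_roi:.0%}")  # prints 100%
```

The function returns a decimal (1.0 here); formatting it as a percentage is only for display.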

So, what would be the ROI of introducing DevOps to that process? More importantly, how could you predict it? There are many ways to answer the ROI question, including the favorite “that’s a bad question, you shouldn’t want that” which can take on all sorts of subtle and helpful forms. Let’s look at three possible approaches.

1. Bottoms-up ROI: We know everything and have put it in this spreadsheet

If you have clear inputs and outputs — your gains and costs — then things can be realistically simple. This is the favorite approach of ROI spreadsheets: they’ll cost out software license costs, hardware/IaaS costs, and people costs (employees and consultants).

Once you’ve figured out costs, you need to estimate what your gains will be: either based on historic run rates, or, more likely, on a mixture of a prediction and hope for how much you’ll make in the future. Tracking the demand for software can be hard and this estimate is one of the most dangerous parts of this simple method. If all you want to do is track the ROI for saving money, perhaps things are a little easier. And while this implies that you’re not looking to DevOps to support a revenue growth strategy, perhaps that’s good input: if you’re not looking to grow your business, maybe it’s not right for you and will have negative ROI.

You then have to pick a period of time to snap-shot and you just run the math.

Of course, few, if any, of the things you’re costing out here are “DevOps.” You might spend money on a commercial continuous integration tool, on a cloud platform or a DevOps consultant. You’ll certainly spend money on people…but you didn’t really spend money on “doing DevOps.”

You might be tempted to simply ascribe gains to DevOps. “For this release, we were doing DevOps, and we made $30,000 with apple muncher! DevOps brought us $10,000 in new revenue.” But that doesn’t feel right.

Still, if you have a good handle on the costs during some period of time where you were doing DevOps and the gain that resulted from that period of time, you could come up with a bottoms-up ROI analysis. I think it’ll be somewhat dicey since it’s so hard to attribute costs and gains directly to DevOps but, hey, it’s better than either telling people they’re asking the wrong question or its mute cousin: nothing.

2. Were the efforts to change worth it? That is, DevOps is all cost

As you might be teasing out, one of the problems with ROI is that it doesn’t really take time into account. You need to draw clear lines around the time period in which you’re including the factors that create your gains and costs. (If you’re interested in an approach that does take time into account, check out Rex Morrow’s suggestion to use IRR instead of ROI.)

Using this lack of time problem as a generative constraint, you could instead study the ROI of changing to DevOps. What did switching over to DevOps cost us? What did it cost us compared to maintaining our current process state?

Here, you’re taking whatever your regular ROI calculation is and just adding the one-time cost of time and money it took to change to DevOps. Figuring out what your gain is will be problematic. Again, what you’ll be gaining are new capabilities (to deliver software faster and increase your uptime in production); how those contribute to gains is still left as a mysterious exercise to the reader.

Still, if you want to run the numbers on something like “they tell me it will take three months and $50,000 in training and consultants to ‘do the DevOps’” this might satisfy your ROI craving. Again, you’ll need to have a pre-existing ROI at hand to simply plug your DevOps costs into.
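As a rough sketch of that “plug it in” exercise, assume the apple muncher keeps making $20,000 and costing $10,000 each month, and that you look at a 12 month window. Those assumptions, the window, and the function names are mine and purely illustrative; only the $50,000 one-time figure comes from the scenario above. Note that this treats DevOps as all cost, with no change to gains.

```python
def roi(gain: float, cost: float) -> float:
    """Return ROI as a fraction: (gain - cost) / cost."""
    return (gain - cost) / cost


def roi_with_change_cost(monthly_gain: float, monthly_cost: float,
                         months: int, one_time_change_cost: float) -> float:
    """ROI over a window, adding a one-time cost of changing process."""
    total_gain = monthly_gain * months
    total_cost = monthly_cost * months + one_time_change_cost
    return roi(total_gain, total_cost)


# Assumed steady-state numbers (hypothetical), over an assumed 12 month window.
baseline = roi_with_change_cost(20_000, 10_000, 12, 0)
with_devops = roi_with_change_cost(20_000, 10_000, 12, 50_000)

print(f"Without the change: {baseline:.0%}")
print(f"With a $50,000 one-time change cost: {with_devops:.0%}")
```

Unsurprisingly, the ROI drops when you only add cost. That’s the limit of this approach: it tells you what the change cost you, not what it got you.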

3. Pain avoidance and remediation

In the “DevOps is all cost” ROI scenario, we avoided ascribing gain to changing to DevOps. Again, while this is overly simplified, the deliverables of DevOps are to provide a continuous delivery process for your product and ensure that your product has excellent uptime (that is, “it works”). How could you account for the gain of those two desirables? You could create a way of assigning value to the knowledge you gain from weekly iterations about how to improve your product. You could also calculate the savings from avoided downtime.

It’s fun to model-out placing value on the first part, “knowing,” but most people asking for ROI will likely look at that as a “soft” metric and, therefore, not really useful to their “hard”-centric minds. Including money saved (or generated?) by avoiding downtime could be interesting in a point in time (if a trading system goes down, money is lost when no one can trade), but how do you account for it ongoing?

The issue with including DevOps in this “easy” type of ROI calculation is figuring out how much gain and cost to attribute to DevOps.

As with uptime, sometimes it can be easy: before we did DevOps, the system was down two hours a day, now it’s only down five to 10 minutes a day, if at all. If there’s a pain you’re seeking to remove, then perhaps this model will work.
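Here’s a small sketch of how you might put a number on that downtime example. The two hours and 10 minutes a day come from the scenario above (I’m using the worse end of the after-DevOps range); the 30 day window and the $100 per hour cost of downtime are placeholders I made up, and you’d need your own figure for the latter.

```python
def avoided_downtime_hours(before_min_per_day: float,
                           after_min_per_day: float,
                           days: int) -> float:
    """Hours of downtime avoided over a period, given daily minutes down."""
    return (before_min_per_day - after_min_per_day) * days / 60


# Two hours a day before, 10 minutes a day after, over an assumed 30 days.
hours_avoided = avoided_downtime_hours(120, 10, 30)

# Hypothetical: what an hour of downtime costs you. Replace with your own number.
cost_per_downtime_hour = 100
gain_from_uptime = hours_avoided * cost_per_downtime_hour

print(f"Downtime avoided: {hours_avoided} hours")
print(f"Gain to plug into an ROI calculation: ${gain_from_uptime:,.0f}")
```

The hard part, of course, is the cost-per-hour figure, not the arithmetic.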

Your pain might also be “it takes us too long to deliver software,” which is a common problem for DevOps adopters. If you know how to measure the gain of time to market, for example, then you can do one of these bottoms-up ROI cases: “We were able to deliver a third release of apple muncher in two weeks instead of the six it had been taking. This means we could start charging for the new in-app purchases sooner, gaining us $5,000 more over that two week period.”

If you like this kind of figuring, check out Zend’s suggestion for how to do continuous delivery ROI for some inspiration. Like all “good” ROI calculations, it requires changing ROI around slightly to fit what’s measurable…and some good estimating.

So, can you send me a spreadsheet with all this in it?

I’ve deftly avoided actually giving you anything actionable here. Calculating ROI is a very numbers-, spreadsheet-friendly exercise and any answer should really include at least a starter spreadsheet to get you calculating things. However, as the above hopefully shows, it is indeed the case that asking for “DevOps ROI” is the wrong question. The “ROI” is getting the process and tools in place to create a better product. Obviously, as you rack up the costs associated with DevOps (both in money and time spent), you can start to model the overall ROI of the project versus the revenue and profit you generate, but there’s little DevOps specific about that.

Beyond such obvious answers, when I see people asking for “DevOps ROI,” what we can offer them is over-thinking like the above and examples of it working at organizations. Examples like Allstate and Humana are good mainstream cases, and you can listen in to more on the excellent Goat Farm podcast.

Additionally, I would suggest focusing on looking at DevOps as a continual improvement initiative rather than trying to predict ROI. Much of the problem with figuring out ROI is in having to predict costs and gains. Instead I would try to focus on tracking and trying to improve how you’re doing things in short intervals. In my experience, most organizations devalue the idea of continuously learning and trying to improve their process. Focusing on that might be a better use of time than summoning up a solid case for DevOps ROI.

I’d love to see examples of how you did a DevOps ROI case…or avoided it altogether. If we can accumulate enough after-the-fact studies, then at least we could make a “rule of thumb” collection. Leave a comment below!

(I originally wrote this July 2015 for FierceDevOps, a site which has made it either impossible or impossibly tedious to find these articles. Hence, it’s now here.)

Here’s how we can help push DevOps into the mainstream

Can DevOps declare victory yet? Not quite, but soon.

Figuring out when a technology inflection point happens is always hard, if not impossible, in real-time. It’s easy to point backwards and say when ERP, agile software development, the Web, business intelligence, mobile or cloud suddenly became “normal.” I think DevOps is right at the door of that point, and as some recent Gartner predictions have proffered, we could see something like a quarter of all large enterprises using DevOps next year.

But it won’t be easy. The same house of industry sages also threw some cold water on that exuberance by predicting a 90% failure among organizations attempting to do DevOps if they fail to properly address process and culture.

As DevOps spreads to more and more IT shops, what can we in the DevOps community do to help? Clearly, we need to keep up the overall conversation about what DevOps is and the process/cultural changes needed to be successful. Another critical element is to start telling more and more stories of how non-technology companies are succeeding with cloud and DevOps. I think the recent Humana profile provides an interesting template here, as does Standard Bank’s forays into DevOps.

In addition to keeping up the good work, there are four key areas that will be helpful.

1. Define the goal properly

In one of my favorite straw-polls, groups who focus on the wrong outcomes and goals with private cloud have similar failure rates as those Gartner describes for organizations attempting to do DevOps.

What exactly those right goals are, for both DevOps and cloud adoption, is a new theory of mine I wanted to road-test with the DevOpsDays Austin crowd. As far as I can tell, the best goal of both a “cloud project” and “doing DevOps” is to do continuous delivery. So, cloud and DevOps let businesses set up the process and technologies needed to deliver custom written software on weekly, if not shorter turns, and actively study, learn and adapt software from the feedback of actual people using their software. This is the path of becoming a software defined business and DevOps is the definitive “how” of how that’s done.

To that end, I suggested to the audience in Austin that we should start, more or less, thinking of continuous delivery and DevOps as synonymous. Once you frame what DevOps is — what DevOps enables — as that, the conversation becomes crisper and, I believe, easier for everyone to understand and do something about. As I discussed last time, becoming a software defined business entails (a.) starting to think in a product-oriented manner (greatly facilitated by continuous delivery), and, (b.) ensuring that you have the overall cloud platform in place that provides the feathered, infrastructure bed for everything.

And, to add to the tracking of DevOps’ ascension to the mainstream, if you think of it as continuous delivery, some recent studies have shown that while overall CD use is low, growth has been ramping up year over year, just like DevOps.

2. Clearly explain the DevOps stack(s)

While even the best tools without the proper process (or “culture”) are ineffective, most people think in terms of stacks and tool-chains. So many of the DevOps conversations I’ve been involved in over recent years start by talking about tools and technologies. We’re in IT: it’s what we know.

I’d really like to see us start discussing common tool-chains and patterns of use (“cookbooks” to use an older, common programming documentation metaphor) for doing DevOps. Reference implementations even! Vendors do well telling you what they think the toolchain should be — please, oh please, feel free to ask me! ;). In fact, I’d say there’s almost an unhelpful amount of fragmentation in the infrastructure management layer at the moment: there are so many options that one can be left confused and overwhelmed.

Instead of letting us vendors define those stacks, I’d like to see the overall community get even more involved. Don’t be afraid to talk about tools in the face of all this culture talk! And don’t let us vendors steal the show.

3. Work with legacy code

Almost by definition, the IT shop at a non-technology company will be chock full of existing IT and “legacy code.” That’s the very IT that was once the growth-engine darling of the company and laid the foundation for where they are now.

As we all know but try to shy away from admitting too loudly, the new cycle of code and tech rarely works with the previous cycle’s code. I talk with companies almost weekly that are very interested in the question of how to integrate new cloud-native and mobile applications with five, 10, even 15+ year old centralized IT services. They want all the power of cloud and continuous delivery, but need help rationalizing and working with what they already have.

In my view, this is a conversation that doesn’t happen often enough in the DevOps community. It first starts with a (wait for it!) seemingly doddering old term: “IT portfolio management.” That is, taking the time to assess what IT you have and understand the business priorities around it. Without that kind of big picture, systems-based understanding of what you have, any whiz-bang awesomeness you get with DevOps will pale in comparison to the rumbling rebar-festooned concrete ball of legacy IT you have to deal with. (Damon Edwards gave a great talk right before mine introducing one method of getting down and dirty with portfolio management.)

There are many thought-technologies of how to approach this, from Gartner’s bi-modal IT approach, to some interesting work going on over at the Cutter Consortium. The point is to have the discipline and maturity to actually do portfolio management so you can start to improve everything and better prioritize your time and projects.

And, to point out the obvious: we need to start documenting how the applications being written and supported by DevOps teams are integrating and co-existing with non-DevOps (or “legacy”) applications and services.

4. Keep up the land-grab

As its name implies, DevOps has been on a land-grab mission that started back in the Agile days. If Agile had gone the portmanteau route in naming itself, we might have seen DevQA, or even ProductDevQA. Agile development very consciously crossed silos and unified product management and QA with development, so much so that by the time we came up with DevOps, “Dev” represented all of those traditional roles.

Now, as companies are looking to IT and custom written software to help them become software defined businesses, DevOps-minded folks need to start thinking about how they can get more involved with “the business.” Do you know who these mythical business people are, what they’re worried about, how they think? Can you speak their language and help them learn yours?

To pick one very specific item that’s always a punji pit of IT despair: what KPIs and metrics should you use to communicate “up the chain”? (Ernest Mueller and Karthik Gaekwad have a great presentation on just this topic from last year.)

Think of it this way: what is the “API” for your business, and how can you start programming it…if not designing the API? Once DevOps is tightly integrated with the business side, and most companies are actively thinking about how custom written software can help run, grow, and innovate their business…then we’ll be able to declare DevOps success in the mainstream.

(I originally wrote this May 2015 for FierceDevOps, a site which has made it either impossible or impossibly tedious to find these articles. Hence, it’s now here.)

Software Defined Businesses need Software Defined IT Departments

(I originally wrote this April 2015 for FierceDevOps, a site which has made it either impossible or impossibly tedious to find these articles. Hence, it’s now here.)

Quick tip: if you’re in a room full of managers and executives from non-technology companies and one of them asks, “what kind of company do you think we are?”…no matter what type of company they are, the answer is always “a technology company.” That’s the trope we in the technology industry have successfully deployed into the market in recent years. And, indeed, rather than this tip being backhanded mocking, it’s praise. These companies are taking advantage of the opportunity to use software and connected devices in novel ways to establish competitive advantage in their businesses. They’re angling to win customer cash by having better software and technology than their competitors.

What does it look like “on the ground,” though, when it comes to “being a technology company”? I’d argue that the traditional way we think about structuring the IT department is different from how technology companies structure themselves. To massively simplify it, traditional IT departments are oriented around working on projects, whereas technology companies are oriented around working on products.

The project-oriented IT department

Project oriented thinking takes in requests from an outside entity and works on solving an immediate, well understood problem. There’s often a definitive end to the project: the delivery of the new “service.” Project oriented thinking is good for creating an initial version of an application, installing and upgrading existing packaged software, setting up new offices, on-boarding employees, and other things that have a definitive completion date and well known tasks.

When organizing around this type of work, you set up a functional organization that can be assembled to solve the specific problem. Here, by “functional organization,” I mean groups of people who are defined by their expertise in something: networking, server administration, software development, project management, audit and compliance, security, and so on. These people are typically shared across various projects as needed and usually are responsible for just what they know about. (For a very different take on how to use functional organizations, see Horace Dediu’s discussion of how Apple organizes itself.)

On top of this, you take a request-driven approach to change management, which defines when to launch a new project or make “small” changes to an existing one (like adding a user). In the 2000s, we fancied up this concept by calling it “service management.”

Once the project is up and running, there may be something called “maintenance mode” which sees IT making sure, for example, that the ERP application has enough disk space available, that new users are added to the application, and that extra capacity is added when needed.

This mind-set is very handy when you’re dealing with keeping a bunch of products from tech vendors up and running. It’s even good if you have custom written applications that are not changed frequently. What’s also great is that because each project is well defined at the start and has a definitive end, you can measure success and financial metrics easily: How many requests did we handle (tickets closed) this month? Did we deliver on time? Did we deliver on budget? Is the project profitable (thus, did we pay too much for that software or get a good deal)?

However, two things have been changing this state of affairs, pushing IT to be more exploratory in nature. As a consequence, the structure of the IT department will need to change as well to maximize IT’s value to the overall business.

Cloud changes the organization into a software eating the world type situation

I often joke that it’s been impossible to see a keynote in recent years without seeing the horsemen of the digital apocalypse. These are the cliche topics that seem to come up in every keynote. Two of these lay the groundwork for why the structure of the IT department needs to change:

- Software is eating the world: cloud technologies and practices have made huge improvements in productivity and costs when it comes to creating and running custom written applications. It’s easier to write and run software now, and the rise in “always on” devices (all those super-computers in our pockets that are on the Internet 24/7) creates a massive footprint for computation: an endless buffet for software.

- Change or die! With this huge buffet of opportunity, there’s a rallying call for companies to invent new business models that rely heavily on software. This means that almost every business has the opportunity to use custom written software to change the nature of their business. Think of the opportunity for taxi companies to use software to change how they operate, or for the hotel industry to come up with a brand new business model to sell empty capacity…and you’re thinking of Uber and AirBnB. The “or die” part is a rhetorical trick to position this imperative as dire. And, indeed, studies have shown that remaining on top has become harder in recent decades. Change is needed to survive.

These two alone create a pull for more custom written software in businesses. It’s faster and cheaper to create software, and competition is relying on that to create new business models that challenge incumbents or, rather, those businesses that are not evolving how they run their business with software. Again: think of all those taxi services versus Uber.

IT — SaaS = what?

There’s a third “horseman” in the broader industry that’s driving the need to change how IT departments are structured: the rise of SaaS. Before the advent of SaaS across application categories, software had to be run and managed in-house (or handed off to outsourcers to run): each company needed its own team of people to manage each instance of the application.

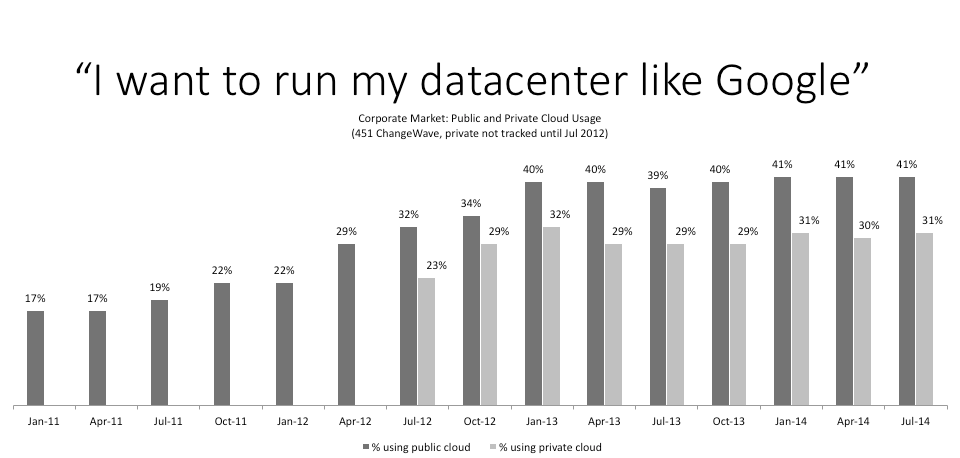

Source: Two surveys of respondents involved in their company’s IT buying decisions, the first (Jan 2014) with 1,137 respondents and the second (July 2014) with 1,097, including 470 and 445, respectively, whose companies currently use public cloud. “Corporate Cloud Computing Trends,” 451 ChangeWave, Feb 2014 & “Corporate Cloud Computing Trends,” 451 ChangeWave, Aug 2014.

As SaaS use grows more and more, that staffing need changes. How many IT staff members are needed to keep Google Apps or Microsoft’s Office 365 up and running? How many IT staff do you need to manage the storage for Salesforce or Successfactors? Indeed, I would argue that as companies use more and more SaaS instead of on-premises packaged software, the staffing needs change dramatically: they lessen. You can look at this in a cost-cutting way, as in “let’s reduce the budget!” Hopefully you can look at it in a growth way instead: we’ve freed up the budget to focus on something more valuable to the business. In most cases, that thing will be writing custom software. That is: developers.

The product-oriented IT department

This is where the shift to thinking like a product organization is vital. First of all, if you feel the need to develop more custom software — as you should! — you’ll need to hire and train more software developers, product managers, QA staff, and related folks. You’ll also want to cultivate an environment where new ideas can be explored, user-tested in production, and then quickly refined in a loop that spans mere weeks if not one week. You’ll need to become a continuous delivery and learning organization. Jonathan Murray has called this type of organization a “software factory” and has explained how he implemented the change while at Warner Music. More recently, books like Lean Enterprise have explained how this type of thinking can be applied outside of “startup culture,” whose concerns tend to be more around achieving a high valuation to get the company acquired or IPO rather than building and maintaining sustainable business models.

Setting up an organization like this requires not only hiring developers, but also creating the actual “factory” that they operate in. I think of this factory as a “platform,” and the folks responsible for standing up and caring for that platform are a new type of operations staff. They’re in charge of, really, providing the “cloud” that developers effortlessly deploy and run their applications in.

This new type of IT staff has to think about how they add in as many self-service and highly elastic services in their “cloud” as possible. They too are creating a “product,” one that’s targeted at the internal developer teams and which must continually have new features added to it.

Meanwhile, your developers will be arranged into product-centric teams, hopefully working more closely with line of business managers and staff who are helping craft and grow new applications. No doubt they’ll need operations skill on the team: staff who know how to properly architect and operationalize cloud-native applications.

This is where the now classic DevOps mentality comes in: in order to properly focus on a product, the team must be responsible for all parts of that product’s life, from development through production and back. With a proper cloud platform in place and the operations team to support it, these goals are more achievable than if the product team has to start from bare metal, or work with IT through a ticket system.

To be pragmatic, you probably can’t dedicate all people fully to a product and will need to share them. This carries large risks, however: you have to make sure you properly prioritize an individual’s time, and realize that they’ll have a harder time keeping up with more products rather than fewer. Quality and ability to deliver on time will likely decrease. It may seem like an impossible goal, but often in order to stay competitive (to survive), large, seemingly impossible changes are needed.