Catch-up: yesterday, I went over everything you need for tech strategy and marketing.

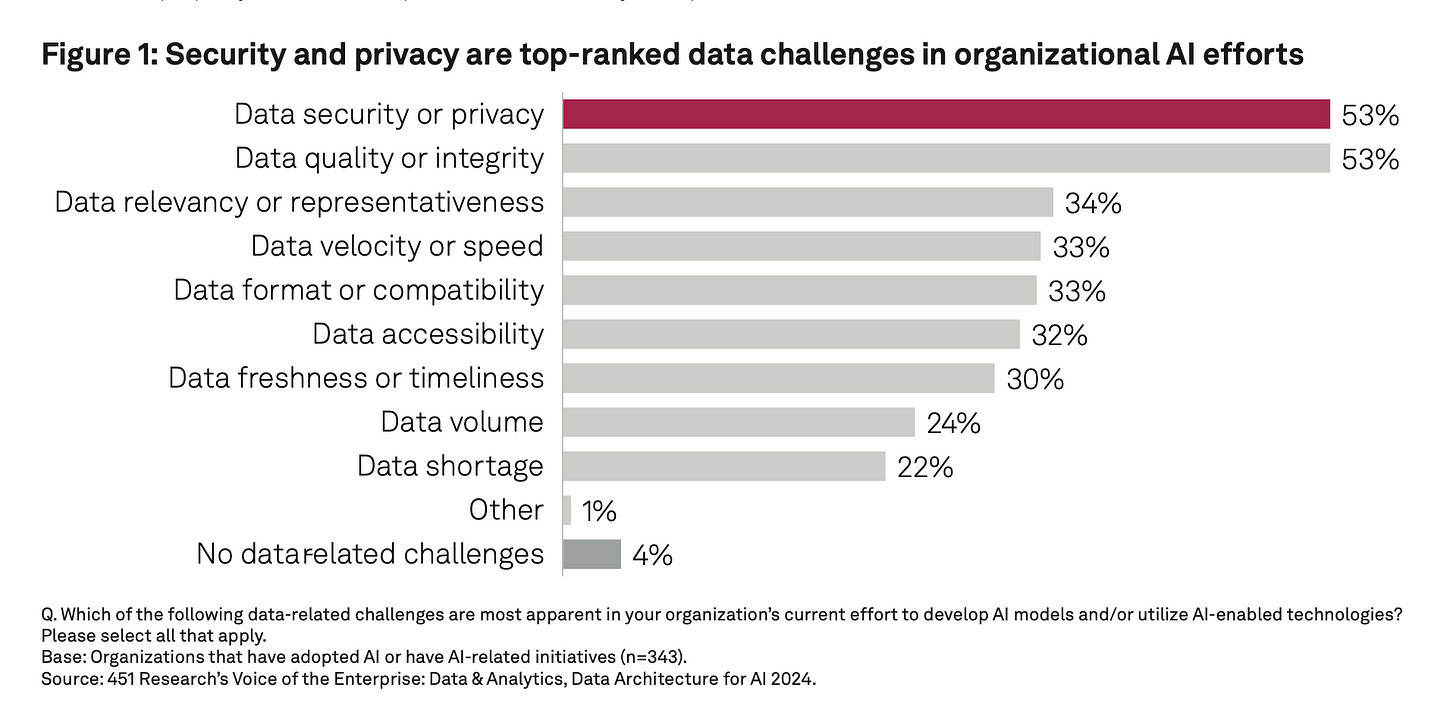

What do I know about security: limiting AI use in enterprises

I find the restrictions on using public AI chat things baffling, given the potential, and obvious, benefits. But I don’t know the CISO perspective and way of thinking. What am I missing?

Yes:

But:

My theories:

It’s just too new and unknown: we don’t even know the risks and (is this a layman’s term?) attack vectors (e.g., the Wiz findings). Better to lock it down and let others fuck around and find out (side note: I didn’t realize that we’d standardized on “FAFO” for that in polite conversation, which is lovely to know. Son of YOLO!).

The restrictions on AI use are more about costs and about control over (or ambiguity of) work product.

IP. If an employee pays for their own chat things, who owns the IP? With AI image generation, in the US at least, you have no (defendable) copyright on the generated images and video. I’m no lawyer, but it seems like that’d be easy to extend to text and code.

Costs. “We don’t want to pay $5 to $20 more a seat/month. What, in this economy?”

Yes, and…so many work functions could get at least a 3x to 5x boost in “productivity” (or whatever the figures du jour are; I’m just swig-swigging those numbers). Or, maybe not. Then again, maybe yes! Me: if it’s good enough for tutoring, it’s probably good enough for knowledge workers.

My theory: I think CISOs just don’t trust it because there are so many unknowns. Which is reasonable: there hasn’t been enough time to learn.

Plus, with Altman and Musk involved, you have unpredictable, batshit crazy people driving the industry. But you could just use Microsoft, AWS, and Anthropic. And if you can get compute cheap enough, have enough ROI to justify the capex and opex spend, or can profit from lower-powered/slower AI models, you could host it yourself and still get the benefit.

Yes, but…isn’t part of CISO risk modeling balancing business benefit against zeroing out benefits/potential growth by clamping down? Over the next two years, if competing firms have looser policies and profit without tanking (or can pay for, or live through, the risks and still profit and keep share prices high), don’t you lose anyway because [insert the software-is-eating-the-world, digital transformation tub-thumping we all used in the late 2010s]?

(I hope you either (a) know me well enough, or (b) are intellectually wise enough to realize I’m not, at all, saying that security isn’t a big deal. The point is to discuss the reaction and resulting strategy.)

Wastebook

“defiant jazz,” forever in our hearts.

“welcome our newest colleagues and look forward to the smell of Axe Body Spray in our elevators.” NTEU.

“technomancers,” The new arms dealers.

Relative to your interests

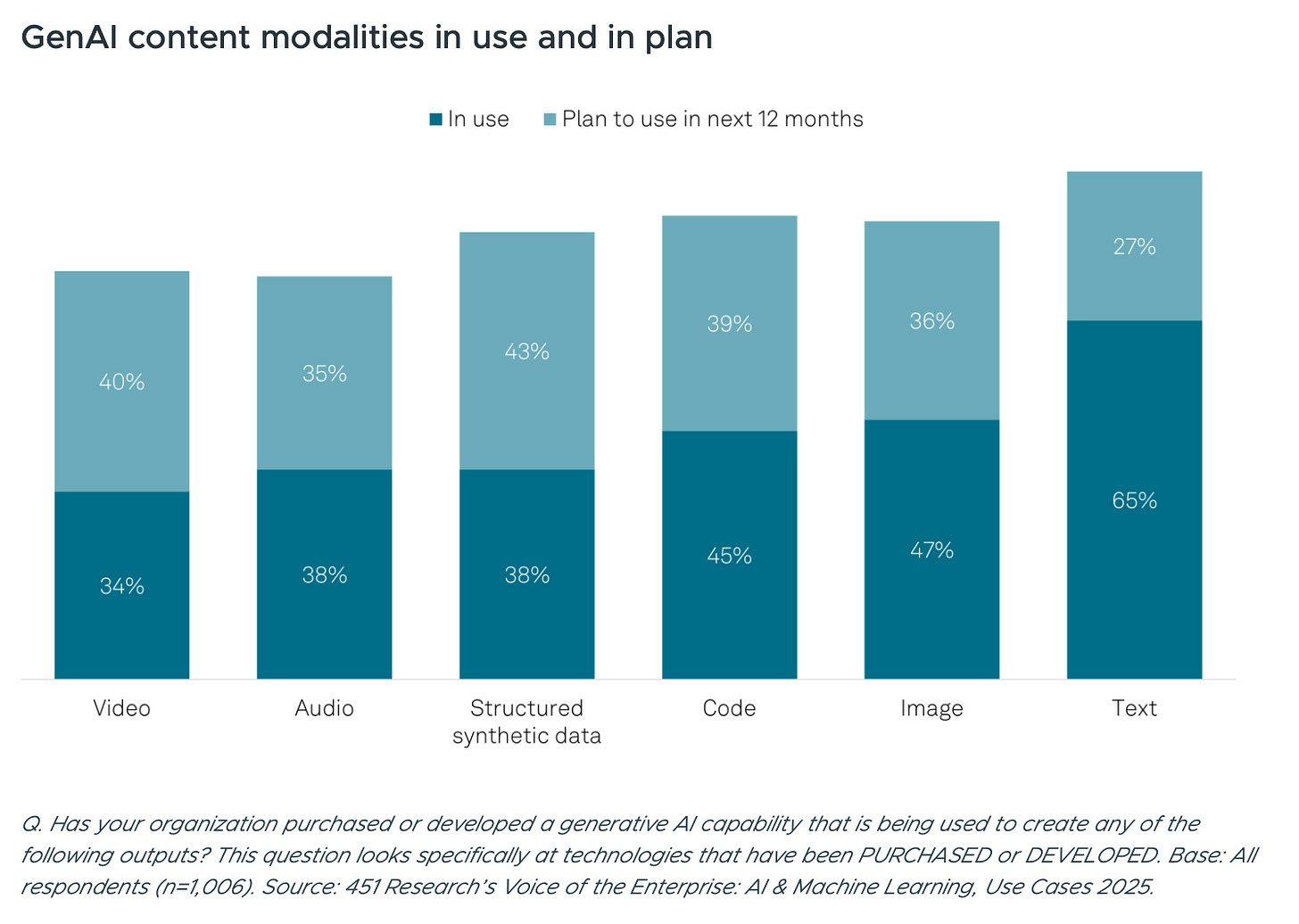

Emerging GenAI Use Cases and Spotlight on Secure Content Generation - If your AI stuff is using the same pool of knowledge as your competitors, you won’t get much competitive advantage. You need to add your own secret info. // “A common challenge, however, when employees use public generative AI tools or foundation models, is a lack of organizational specificity.”

Is Fine-Tuning or Prompt Engineering the Right Approach for AI? - As it says.

Stuck in the pilot phase: Enterprises grapple with generative AI ROI - “More than 90% of leaders expressed concern about generative AI pilots proceeding without addressing problems uncovered by previous initiatives, according to the Informatica report. Nearly 3 in 5 respondents admitted to facing pressure to move projects along faster.”

Extending AI chat with Model Context Protocol (and why it matters) - Adding plugins to the AIs, the hope being that a wide community of developers will form, extending the functionality of the AIs. I’ve seen this in practice with Spring AI and Claude, and it’s very promising, and easy.

Do Marketers Need To Be Writing for AI? - SEO for AI model training. Yup, better start doing that. The good news is, all those SEO-trap pages that you generated (those long ones you never actually show to users/customers) would probably work here…are working here. But it’s likely a good idea to keep doing more of this going forward.

Moderne raises $30M to solve technical debt across complex codebases - “A quick peek at Moderne’s customer base is telling of who is most likely to benefit from its technology — companies like Walmart and insurance giant Allstate. Its investor base includes names from the enterprise world such as American Express and Morgan Stanley, which, while unconfirmed, is safe to assume have invested strategically.” // From what I’ve seen and heard, seems like good stuff.

Context-switching is the main productivity killer for developers - #1 way to improve developer productivity, 30+ years running: stop interrupting them while they’re coding. // “Research from UC Irvine shows that developers need an average of 23 minutes to rebuild their focus after an interruption fully.”

What the robot read

I often ask the robot to summarize articles for me that look interesting…but that I don’t want to read. Below aren’t the full summaries: instead, I asked it to write a Harper’s Weekly Review style summary for you, lightly edited by a meat-sack (me), with my comments in italicized brackets.

Russ Vought quietly reinstated a CFPB procedure essential to mortgage markets, ensuring that banks could continue pricing loans without improvising their own math.

Economists warned that high stock valuations may lead to a decade of low returns, an insight that Wall Street will process just in time to act surprised when it happens. [I don’t really get this one, but that’s the case with most long-term investor “logic,” or lack thereof.]

Some commentary on Infrastructure as Code found that most companies are still doing it wrong, proving once again that automation is only as good as the humans failing to implement it.

DeepSeek spent $1.6 billion on AI infrastructure, amassing 50,000 Nvidia GPUs in a move that may or may not justify the hype surrounding its capabilities. Investors watched as a $2 trillion AI market correction erased valuations faster than a chatbot dodging a direct question. [See above on investors’ “logic.”]

Microsoft, Meta, Alphabet, and Amazon continued their spending spree, ensuring that AI-driven margin compression remains a long-term feature rather than a short-term bug.

Spring AI promises to make generative AI accessible for Java developers, proving that some traditions—like running Java in the enterprise—never die.

Cobus Greyling declared that the future belongs to “agentic workflows,” a phrase that sounds revolutionary but, to my meat-sack friend, mostly means workflows with slightly more AI in them, in a good way.

A debate over AI optimization raged [seems a little strong?] between fine-tuning and prompt engineering, though most developers [or their corporate penny-pinchers] will likely choose whichever option is cheaper that day.

Related: Adam Van Buskirk warned that in a world where all frontiers have been settled, destruction may be the only remaining path forward—an insight that AI companies and their burn rates appear to have already embraced. [See vintage novel and wastebook yes/and above.]

Conferences

Events I’ll either be speaking at or just attending.

- VMUG NL, Den Bosch, March 12th, speaking.
- SREday London, March 27th to 28th, speaking.
- Monki Gras, London, March 27th to 28th, speaking.
- CF Day US, Palo Alto, CA, May 14th.
- NDC Oslo, May 21st to 23rd, speaking.

Discounts: 10% off SREDay London with the code LDN10.

Logoff

I need to think about this a lot more, but if you (a) want to see some examples of ChatGPT Deep Research in action, and/or (b) are interested in industry analyst strategy and M&A scenarios (here, with Gartner), check out these two reports I ran on Gartner’s business and strategy. I printed out the whole chat session, so you can see my prompting, the questions it asked, the first report, some back and forth, and then the second report. You can find it in the SDT Slack, or you could just check it out here:

I did not actually read all the pages, nor did I fact-check it. Pretty interesting to see this kind of output, though. I’ve used Deep Research for interview prep once so far: it wasn’t very impressive, but maybe that’s because I’d already done all the research myself, and the public info was slim.

Meanwhile, despite headwinds, IT seems to have done OK so far: