No links today, but this:

Measuring Developer Productivity

Once you suggest tracking an individual software developer’s performance, you get into big trouble with the thought leaders. This is, you know, pretty much a correct a response. McKinsey decided to have a go recently, giving us all a chance to think about “developer productivity” again. In recent years, I’ve mostly thought about developer productivity in terms of build and deploy automation - I know, just mind-blowing thrill rides, right! That’s cloud native for you! - but this new round has been more higher up the stack. Though, come to think of it, I don’t know if that scope was actually specified.

Anyhow, I think these are what’s on the current stone tablets of developer metrics:

You should measure the developer team, not the individual developer.

Management should only measure business outcomes, not development activities.

Metrics will be gamed by developers and misused by management, likely, with bad results for the business.

I’m sure this is, as Dan North likes to put it, “reductionist,”1 but, sure…?

Homework

For this week’s podcast recording (it’ll be here once published this Friday), I went back and read the McKinsey piece on developer productivity.

It seems…fine?

Like, so much so that I was wondering if the McKinsey people went back and edited after the big stink-up about it.

With that confusion in my brain, I re-read three things three times:

Gergely Orosz and Kent Beck’s two part rebuttal (part one, part two).

Dan North’s review notes on the McKinsey piece.

I think I get what the rebutters are so upset. Let’s see!

McKinsey’s Four New Metrics

The authors at McKinsey are looking for a way to measure developer productivity that the, like, “C-Suite” (“management,” as I’ll put it) can use to make decisions about software development in their organization. A good goal!

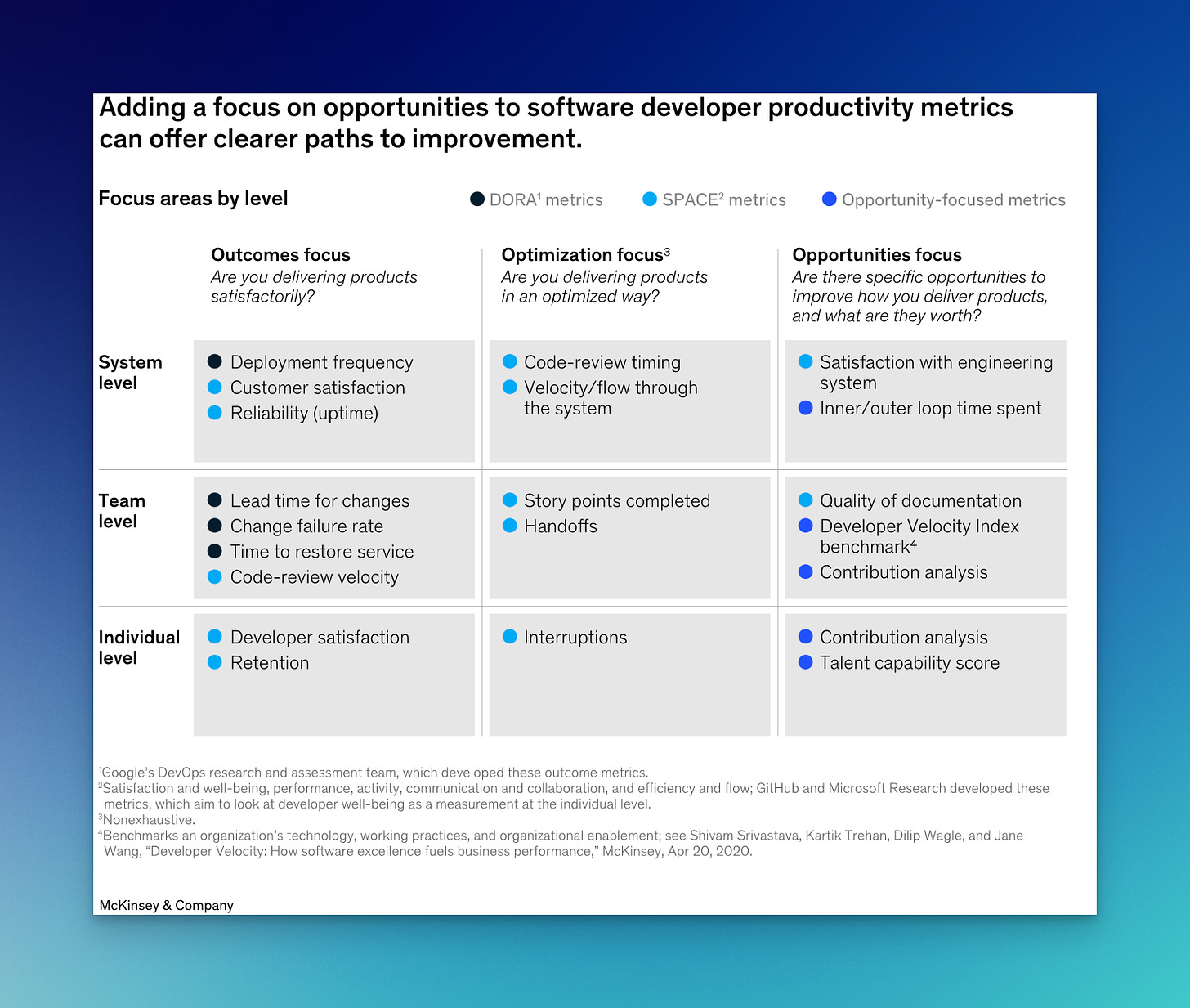

They start with using DORA and SPACE, and adding in four new metrics:

The new metrics are the - what would call that? - purplish-blue ones2 - from the article (I just included the quick definitions:

Developer Velocity Index benchmark. The Developer Velocity Index (DVI) is a survey that measures an enterprise’s technology, working practices, and organizational enablement and benchmarks them against peers.

Contribution analysis. Assessing contributions by individuals to a team’s backlog (starting with data from backlog management tools such as Jira, and normalizing data using a proprietary algorithm to account for nuances) can help surface trends that inhibit the optimization of that team’s capacity.

Talent capability score. Based on industry standard capability maps, this score is a summary of the individual knowledge, skills, and abilities of a specific organization.

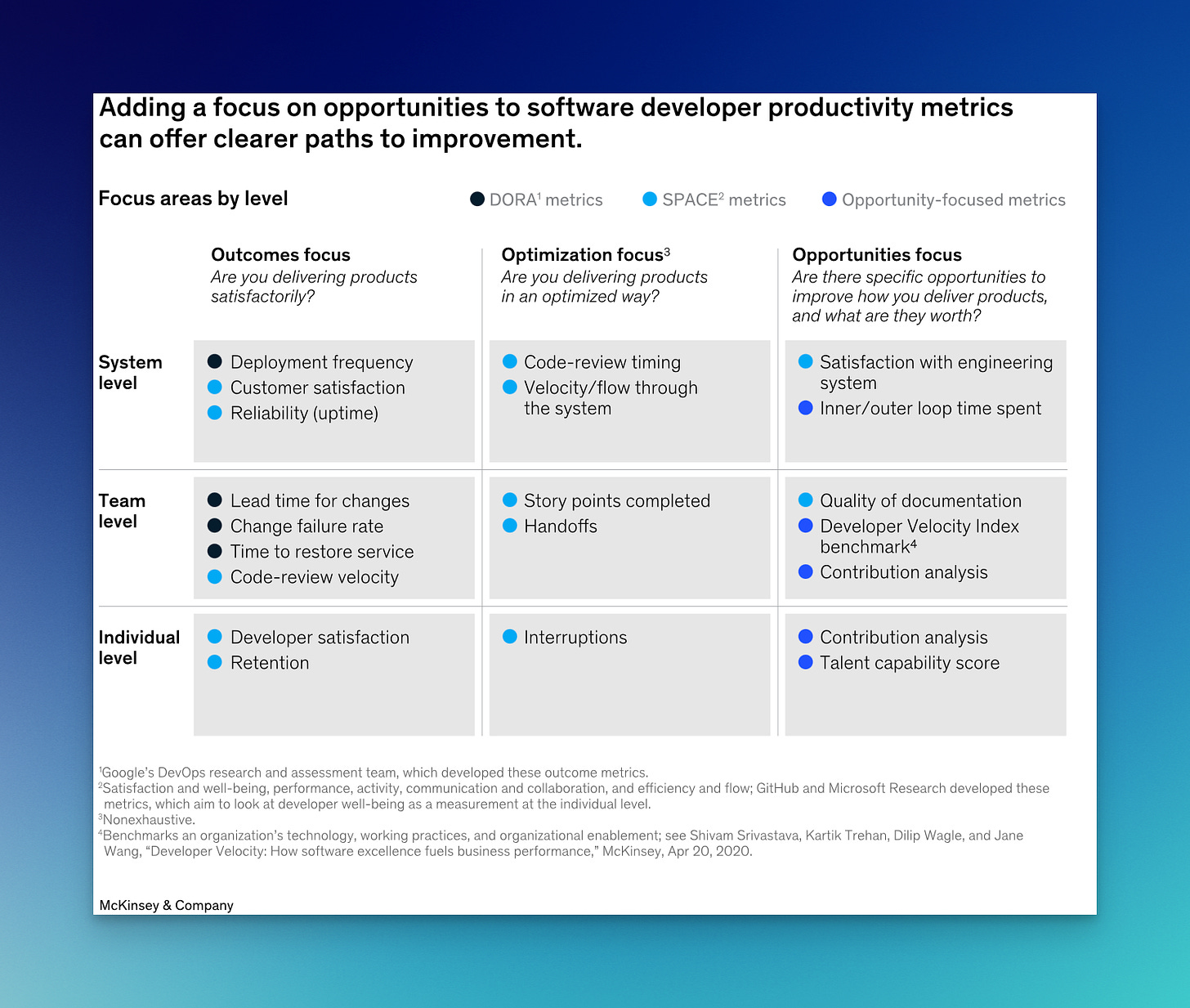

Inner/outer loop time spent. To identify specific areas for improvement, it’s helpful to think of the activities involved in software development as being arranged in two loops. An inner loop comprises activities directly related to creating the product: coding, building, and unit testing. An outer loop comprises other tasks developers must do to push their code to production: integration, integration testing, releasing, and deployment.

For the forth, I think what they should have meant was “developer toil,” but that inner loop/outer loop framing is so tempting.

Don’t Measure Individuals, Management Will Misuse Metrics

The reaction to this was not good. Here’s my summary of the sentiment based on the two rebuttals I read:

You should measure the developer team, not the individual developer.

Management should only measure business outcomes, not development activities.

Metrics will be gamed by developers and misused by management, likely, with bad results for the business.

Let’s look at each!

1. These business outcomes should measure the team, not the individual

This one is the foundation of the counter-arguments. Software development done well is very much a “team sport.” You know, there’s no “I” in “team” and all that.

It may be hard to detect from the outside, but software is not just the aggregate of individuals writing code. It’s like this: I don’t know what to tell you if you don’t just know that - you must not have ever been a developer and experienced it first hand?

For our metrics discussion, the consequence of this is that it’s very difficult to measure an individual’s contribution to the team.3 Rather…it’s a nuanced task. If you do allow people to specialize in certain parts of the code base and/or be “hero” trouble shooters then, like, it is easy to identify who’s important and valuable. Structuring the work so that it is individual based generally causes problems. Generally, allowing people to specialize like this is highly frowned upon.

Dan North brings up a more qualatative way to judge indivcidual performance:

If you are going to assess individuals in a team, then use peer feedback to understand who the real contributors are. I ask questions like “Who do you most want to be in this team, and why?”, or “What advice would you give to X to help them to grow?”, or “What do you want to most learn from Y?” An astute manager will quickly see patterns and trends in this peer feedback, both positive and negative, which are then actionable.

In this method, you are still rating individuals, just on how they help the team and their peer’s assessment of their skills. This feels right. If you’ve been on an application development team, I mean, you know that some individuals contribute more (“are more valuable” if you can stomach that phrasing) than others. You know who’s slacking off. You know who’s padding their estimates. You know who’s stopped learning. You know the bad performers, and the good ones. As a peer, you can also see through the “they’re not a failure, system has failed them” thinking. You know this because you’ll have tried to help them many times. You’ll have tried to change the system such that you can many times. They’ve taken advantage of the five free therapy sessions, and all that stuff. (Why just five? Does any HR department think that, like, any mental issues that are dampening my productivity can be solved in five therapy sessions? You’re going to need at least one just to introduce yourself and set context. Then you’re down to four hours. And, if you’ve been to therapy, you know you spend, like, half the time just meandering about as you and the therapist try to find something to talk about and how to talk about it. And then, even if you solve your problems in those four sessions, you need frequent reinforcement of the tactics you deployed to fit is. Five sessions is better than zero sessions, but if you’re concerned about the mental well-being of your staff damaging productivity, they’re going to need more. Which, I guess, you can, like, get from good health insurance if they provide it. Anyhow. Uh. I was talking about how peers in an application development team will know if someone is a low performer and can’t be helped further, good system or bad system…) And, like, you’re doing your job despite all the things…what’s their deal?

So, if anything, when it comes to individual performance metrics, base it on their peers. And, definitely, absolutely, don’t let management ever see those metrics, as we’ll cover below.

2. Management should only measure business outcomes, not development activities

As with a sales person, management should really just care about the business outcomes that application development teams produce.

“Business outcomes” are things like revenue (sales), cost savings, keeping the application up and running (performance, loosely put), and, though not really considered by the business much, overall application agility (how quickly and easily can you add new features or modify existing ones to change/help how the business runs). There’s always lots of other business outcomes, but you get the idea.

The McKinsey piece adds four metrics (above) that are based on the daily activities of individuals, and, worse from the perspective of the thought lords, there’s one that’s some kind of skills assessment. I didn’t seek out the definitions of these four new metrics much, so maybe they have tons of business value and team laced into them. I mean: they could!

To be fair, the McKinsey piece is not suggesting that these are the only metrics to track. They throw in DORA and SPACE into their overall metrics rating. Yay lots of metrics! Fill the dashboard!

Here’s all the metrics again. The new McKinsey ones are the “opportunity-focused metrics” ones:

There’s only a few “business outcome” metrics in there: customer satisfaction, reliability, lead time for changes, etc. Notably, none of the metrics are “made money for the enterprise,” or the like for non-profit/government organizations.

This is, really, the whole problem with “developer metrics.” All you really need to track is “did this software help the business?” That is, “business outcomes,” a phrase only just a little better than “business value.”

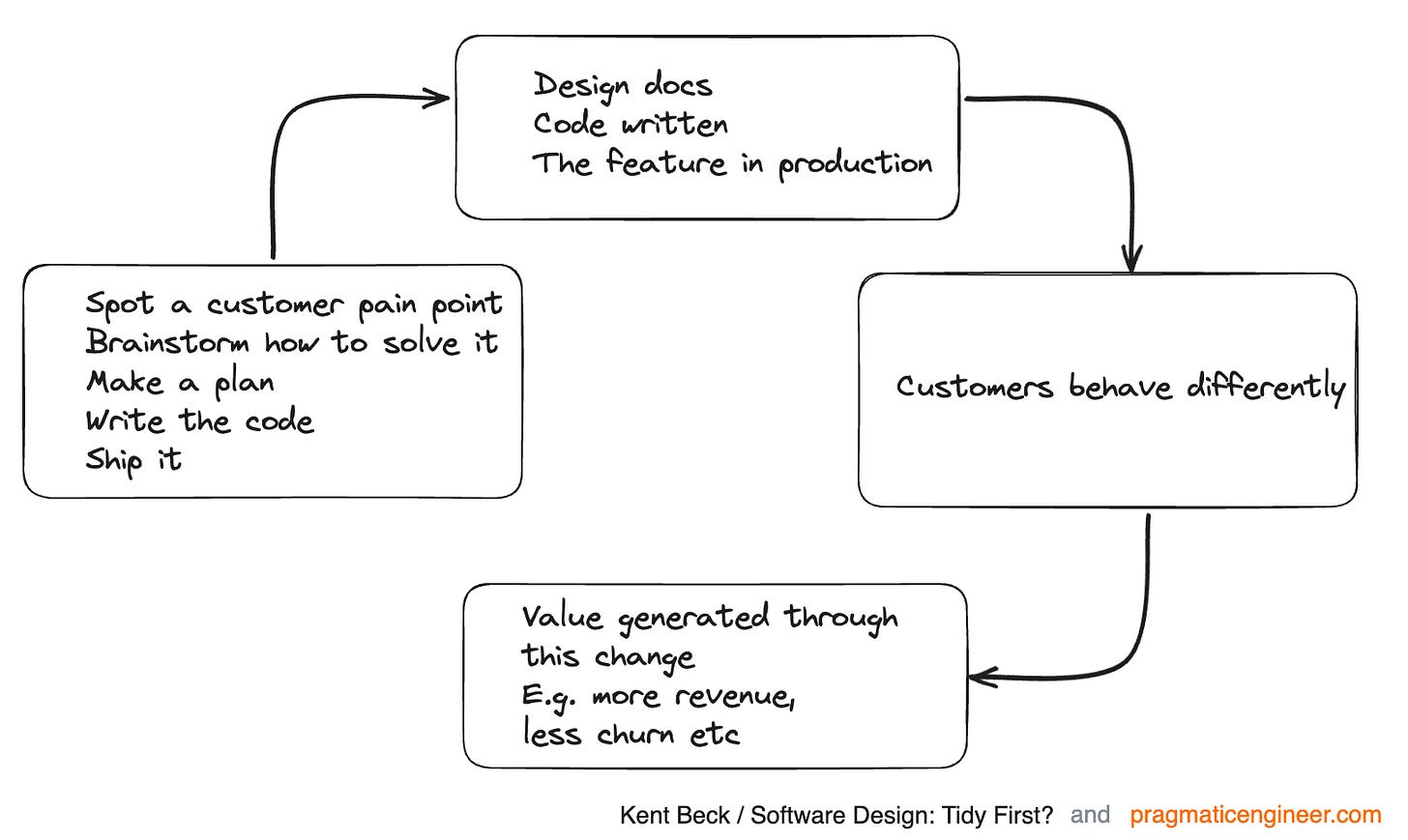

Gergely provides an example of how his team at Uber tracked the team’s business outcomes, which is awesome! The problem is that most organizations don’t track to these business outcomes, and I suspect it’s because (a) they just think of application developers as a factory4 that delivers apps to spec (you know, “waterfall”), and, (b) it’s hard to do.

If you can track the business outcomes of application development teams, that’s the only metric you need to track. Even if the team has “low performers,” who cares if the money’s good?

And, sure: measuring the business outcome of the team is what you should be doing. So, like, do that. It’s very difficult in most organizations, and not even considered a serious idea in not-tech organizations, as Gergely pointed out last year.

Even more wicked here, when you measure developer activities, figuring out the right activities to measure is difficult. So many things that a team (let alone an indivdual) does in development are unseen and un-trackable, as Dan North points out:

most of programming is not typing code. Most of programming is learning the business domain; understanding the problem; assessing possible solutions; validating assumptions through feedback and experimentation; identifying, assessing and meeting cross-functional needs such as compliance, security, availability, resilience, accessibility, usability, which can render a product anything from annoying to illegal; ensuring consistency at scale; anticipating likely vectors of change without over-engineering; assessing and choosing suitable technologies; identifying and leveraging pre-existing solutions, whether internally or from third parties. I am only scratching the surface here.

This is another one of those things that you only appreciate, and believe, if you’ve been a developer.

3. Metrics will be gamed by developers and misused by management, likely, with bad results for the business.

Given all of that - you should measure the business outcomes of the teams, you should measure the team not the individual - bad things will happen if you track individual performance. First, people will game the system and max out the activity based tracking. As the rebutters point out, if you value commits/PRs, developers will just make a bunch of tiny commits. And so on.

I find this gaming thing only half of the counter-argument. There’s whatever named notion that once someone knows how they’re measured, they’ll game the system. The second part that’s left off is that, yeah, sure, if management is dumb. We all know that metrics will be gamed, so you try to redesign the metrics ongoing and use them skeptically. There’s a spiral into cat-and-mouse games here, I guess.

Kent Beck points out an example at Facebook where that didn’t work out - and I think the outcome was they stopped doing it? Hopefully. On the other hands: that’s probably the least of Facebook’s problems, if they, really, even have any problems based on the shit-tons of cash they generate. Still. One should strive to be excellent, not just well paid. (At least, that’s what we should tell our management chain.)

But, like, does this mean we shouldn’t use the DORA metrics because people will “game” them? No, it just means use good metrics and adapt. Use metrics to get smarter about your qualitative (a fancy word for, I guess, “subjective,” rather, “your gut feel”) assessments.

This brings up the second part, here: you can’t trust management to use these metrics well…unless they understand how software is actually done. Gergely and Kent give a good, simple overview of exactly that.

So, the worry with the McKinsey metrics is that management will use them without understanding how application development is actually done. This means they’ll misuse them, either to fire people, not promote them, misallocate budget (giving too much to some teams and not enough to others), etc….all because someone didn’t break up their PRs/commits into small enough chunks. Hahahah - jokes! (But not too far off.)

What’d be better

As the rebutters all point out, what’d really be useful is to get management to understand how application development is done (above). With that understanding, you’d realize the above flaws and come up with some better metrics…or indicators and health checks.

The DORA metrics are trying to predict business outcomes without actually knowing the business outcomes (revenue, etc.). They’re saying “if you do well at these four metrics, then it’s possible for the business to do a good job…so…hopefully they do that.” Hey, hey! SILOS. Local optimization! FAT BOY SCOUT! But, you know, that’s probably fine.5

So, I think it goes like this:

If you could tie the application development teams work to actual business outcomes, then you’re all set. And if you can’t, you should figure that out.

Else, if you can’t do that, you should use the DORA metrics to measure your own local optimization.

Else, if you can’t do that, you should only rate individuals based on their peer reviews.

And, if you can’t do that, you’re working in an unenlightened, possible even toxic work environment.

And, if you can’t get a new job (or are adept at working in that system and maximizing your take with reasonable long-term stability), whatever you do, if you have the data on individual performance, first, destroy it and stop tracking it, and, second, do not let management outside of development see it.

And, if you have no idea what’s going on, must keep your commits small and your lines of code voluminous and you’ll probably be fine.

Next week, on October 17th, a whole passel of my team mates and I are hosting an online SpringOne Tour. It’s free to attend, of course. If you can’t make it to one of our in-person events, check this one out. There’s over 20 talks you can choose from, including mine on platforms.

If you’re a programmer - especially a Java programmer - or doing anything with cloud native apps, there’s something in it for you. Register for free, and check it out on October 17th.

Inner Loop/Outer Loop

In his write-up about developer productivityDan North has a discussion about the inner loop/outer loop model. Here’s McKinsey’s diagram:

As he points out, it seems to be in conflict with the “shift left” mindset. Without typing too much about it: yes?

In our cloud, DevOps, cloud native world, I don’t think we’ve every figure out a good answer to the question “what are things application developers should be doing?” These would be things the application developers should be automating, or letting other people do.

Unless you’re dhh, your application developers should not be racking and stacking servers. They probably shouldn’t be writing their own container management systems, nor working with kubernetes directly instead of layering a buffet of CNCF landscape on-top of it. They should probably not being creating your security risk models? If you’re of the Heroku/Cloud Foundry mindset, they shouldn’t be deploying their applications to production.

I’m blowing up the scope of inner loop/outer loop, sure. But defining what application developer “toil” is versus is not is both very clear (building clouds) and not very clear (doing compliance audits - not a great example?).

All models are flawed, just some better flawed than others. For the McKinsey piece, if you just replaced the inner loop/outer loop talk with “toil,” I think you’d be cool. And, of course, you’d need a footnote that said “one application developer’s toil is another application developer’s competitive advantage.” '

What we want to get at is something more like: there’s some stuff that’s a waste of time for application developers to do, and they should not do those things.

For example, Dan North points out that your path to production and infrastructure stuff (“A fast, automated release pipeline is a key prerequisite for frequent releases, and skill in defining, provisioning and evolving the infrastructure to support this is a differentiator”) is incredibly valuable.

Like, big yes (my monthly paycheck depends on that infrastructure being incredibly valuable!): but that’s probably the work of a team other than your application developers. That seems to be the case at the tech company darlings that have separate developer tools and platform groups.

Upcoming

Talks I’ll be giving, places I’ll be, things I’ll be doing, etc.

Oct 17th SpringOne Tour Online (free!), speaking. Oct 10th, 17th, 24th talk series: Building a Path to Production: A Guide for Managers and Leaders in Platform Engineering Nov 6th to 9th VMware Explore in Barcelona, speaking (twice!). Nov 15th DeveloperWeek Enterprise, speaking.

Discount code for KubeCon US - while I won’t be at KubeCon US this year, my work has a discount code you can use to get 20% off your tickets. The code is: KCNA23VMWEO20.

Management Misalignment

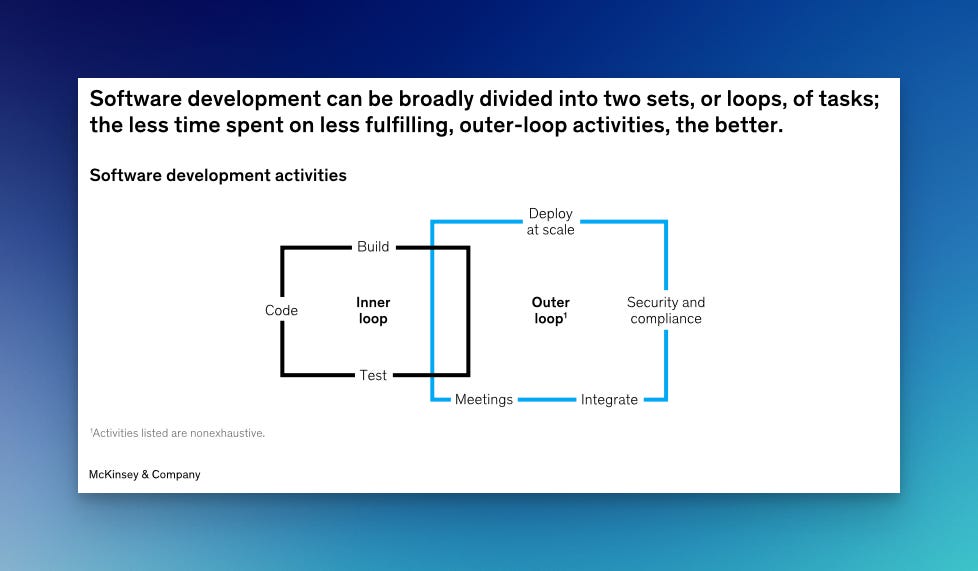

Related to developer productivity, charts/surveys that silo handoff problems are always fun. Here’s one from Cat Hicks and crew:

Schedule (“timeline”) is always a problem and misalignment. But, schedule is a problem in all walks of life. It’s not only developers that are bad at time estimates, it’s everyone.

Budget and cost is not shown (maybe “lack of technology resources” is a proxy?): management always wants to pay less (more for the shareholder and, thus, themselves). Again, as in life, so in business: "I'm glad I paid more than I had to," said no one ever.

Getting the requirements right is difficult, but a lean design approach can help: the business should take part in this and use the agile advantages of software. If you do software right, you can experiment and develop how the business functions: you don't have to get it right the first time. E.g., the commercial kitchen pivoting to measuring mayonnaise. This idea of "pivoting" is key and needs to go up to the business. It's a pun, right: Pivotal Software.

This last point is getting us closer to the business output dream. The enlightened stage of understanding how software is done comes when you get that you don’t have to be perfect each release. And, in fact, that is not good. If you’re not getting your “requirements” wrong frequently, you’re not trying hard enough to come up with new things and improve existing one.

I don’t know sportsball, but my understanding is that they don’t get the ball in the goal every single time they try. And this is considered a-OK, just fine. The hockey puck quipper also said: "you miss 100% of the shots you don't take." So, what if I told you with you software you could take, virtually, unlimited shots? Yeah. SOFTWARE.

Wastebook

“Might-could be fixin’ be real peachy-like.”

Sometimes you don’t squeeze to get the juice.

“You know me: I don’t wear velcro shoes.”

Logoff

Per above, I have the second installment of the the path to production talk series coming up next week. There’s something scurrying around in this developer productivity talk that gets real close to what I want to discuss: what does it mean to think of and use your software “factory”6 strategically?

If we know the wrong metrics to monitor, and we know the right ones, that should be some kind of trailing(?) indicator of how to correctly think about software. We’ll see! Also, we were going to cover some OGSM thinking, which I’ve looked at many times and am always left bemused and puzzled.7

Also, yet again, as ever, ThoughtWorks has written the definitive piece on all of this before us Pivotal/Tanzu people can get around to typing it up. No, really, it’s great stuff.

You should register for it and watch it - all for free, you know.

Finally, a rare Garbage Chair of Amsterdam, Duivendrecht edition:

🎶 Suggested mid-week outro.

I’m pretty sure when people use the word “reductionist,” it’s a synonym for “stupid,” or, at the very least, “wrong.”

My color dropper thing says it’s #1c51fe. Pretty nice to look at, actually.

This is especially true if you use the practice of rotating pairing (which most people don’t, let alone pair programming) where you have two people work on the same thing, and have them switch at least two times a day, moving to a different part of the code base. Pair programming has many advantages: you avoid people specializing in one part of the code base, which also avoids people being ignorant of other parts of the code base (thus, doing some bus number risk management as well). You train people on the job, both senior to junior, and junior to senior. And so on!

For future management consulting blog writers, “factory” is another word the thought leaders get all upset about. The US military can call what they do a factory - I guess you don’t want to argue with that lot - but you can’t apply that word to software developers. The thinking goes that a factory stamps the same thing out over and over again, there’s no creativity. Sure. What that misses, though, is that the developers are the ones using the factory, all of their tooling, the platform they use, the processes they follow - those are generally more static, like a factory. If you consider the developers themselves as “the factory,” then the term is shit (who wants to be reduced to a factory?). I think the work “factory” is great for all that stuff below the application developers. But, then again, the platform engineers (or DevOps engineers…or whatever) will probably be upset at being called a factory. Real org-chart geometry diction quagmire there.

I suppose this might make the product managers out there sad. But, again, hey, if you have product managers on your team, then you’re probably a-OK: your product manager should have an ongoing idea of the business value you’re actually creating with the software.

Yeah, yeah. See footnote above about “factory.”

Usually, when I read these kinds of “operationalize your business strategy real-good-like” frameworks I think “yeah, if I had the capability, knowledge, and corporate will to do that kind of modeling…I wouldn’t have a problem in the first place.” But, I haven’t really given the OGSM thing time. And, you know, what do I know: I just make slides.